speaker {

name 'Cédric Champeau'

company 'Gradle Inc'

oss 'Apache Groovy committer',

successes 'Static type checker',

'Static compilation',

'Traits',

'Markup template engine',

'DSLs'

failures Stream.of(bugs),

twitter '@CedricChampeau',

github 'melix',

extraDescription '''Groovy in Action 2 co-author

Misc OSS contribs (Gradle plugins, deck2pdf, jlangdetect, ...)'''

}Minutes to seconds, maximizing incrementality

Cédric Champeau (@CedricChampeau), Gradle

Who am I

Agenda

Incremental builds

Compile avoidance

Incremental compilation

Variant-aware dependency management

Incremental builds

Why does it matter?

Gradle is meant for incremental builds

cleanis a waste of timeTime is $$$

Gradle team

~30 developers

~20000 builds per week

1 min saved means 333 hours/week!

The incrementality test

Run a build

Run again with no change

If a task was re-executed, you got it wrong

Properly writing tasks

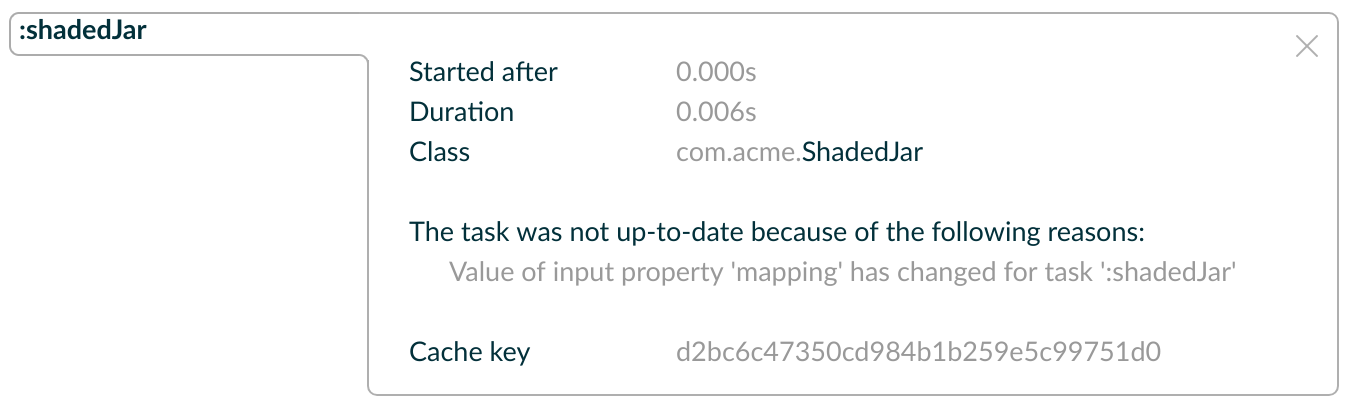

Example: building a shaded jar

task shadedJar(type: ShadedJar) {

jarFile = file("$buildDir/libs/shaded.jar")

classpath = configurations.runtime

mapping = ['org.apache': 'shaded.org.apache']

}What are the task inputs?

What are the task outputs?

What if one of them changes?

Declaring inputs

public class ShadedJar extends DefaultTask {

...

@InputFiles

FileCollection getClasspath() { ... }

@Input

Map<String, String> getMapping() { ... }

}Declaring outputs

public class ShadedJar extends DefaultTask {

...

@OutputFile

File getJarFile() { ... }

}Know why your task is out-of-date

Incremental task inputs

Know precisely which files have changed

Task action can perform the minimal amount of work

Incremental task inputs

@TaskAction

public void execute(IncrementalTaskInputs inputs) {

if (!inputs.isIncremental()) {

// clean build, for example

// ...

} else {

inputs.outOfDate(change ->

if (change.isAdded()) {

...

} else if (change.isRemoved()) {

...

} else {

...

}

});

}

}Compile avoidance

Compile classpath leakage

A typical dependency graph

Cascading recompilation

Cascading recompilation

But also with side effects:

compile dependencies leak to the downstream consumers

hard to upgrade dependencies without breaking clients

Separating API and implementation

Example

import com.acme.model.Person;

import com.google.common.collect.ImmutableSet;

import com.google.common.collect.Iterables;

...

public Set<String> getNames(Set<Person> persons) {

return ImmutableSet.copyOf(Iterables.transform(persons, TO_NAME))

}Before Gradle 3.4

apply plugin: 'java'

dependencies {

compile project(':model')

compile 'com.google.guava:guava:18.0'

}But…

// exported dependency

import com.acme.model.Person;

// internal dependencies

import com.google.common.collect.ImmutableSet;

import com.google.common.collect.Iterables;

...

public Set<String> getNames(Set<Person> persons) {

return ImmutableSet.copyOf(

Iterables.transform(persons, TO_NAME))

}Starting from Gradle 3.4

// This component has an API and an implementation

apply plugin: 'java-library'

dependencies {

api project(':model')

implementation 'com.google.guava:guava:18.0'

}API vs impl graph

Change to impl dependency

Change to API dependency

Consumers are not equal

Compile classpath

What does a compiler care about?

Input: jars, or class directories

Jar: class files

Class file: both API and implementation

Compile classpath

What we provide to the compiler

public class Foo {

private int x = 123;

public int getX() { return x; }

public int getSquaredX() { return x * x; }

}Compile classpath

What the compiler cares about:

public class Foo {

public int getX()

public int getSquaredX()

}Compile classpath

But it could also be

public class Foo {

public int getSquaredX()

public int getX()

}only public signatures matter

Compile classpath snapshotting

Compute a hash of the signature of class :

aedb00fdCombine hashes of all classes :

e45bdc17Combine hashes of all input on classpath:

4500fc1Result: hash of the compile classpath

Only consists of what is relevant to the

javaccompiler

Runtime classpath

What does the runtime care about?

Runtime classpath

What does the runtime care about:

public class Foo {

private int x = 123;

public int getX() { return x; }

public int getSquaredX() { return x * x; }

}At runtime, everything matters, from classes to resources.

Compile vs runtime classpath

In practice:

@InputFiles

@CompileClasspath

FileCollection getCompileClasspath() { ... }

@InputFiles

@Classpath

FileCollection getRuntimeClasspath() { ... }Compile avoidance

compile and runtime classpath have different semantics

Gradle makes the difference

Ignores irrelevant (non ABI) changes to compile classpath

Effect on recompilations

Icing on the cake

Upgrade a dependency from

1.0.1to1.0.2If ABI hasn’t changed, Gradle will not recompile

Even if the name of the jar is different (

mydep-1.0.1.jarvsmydep-1.0.2.jar)Because only contents matter

Incremental compilation

Basics

Given a set of source files

Only compile the files which have changed…

and their dependencies

Language specific

Gradle has support for incremental compilation of Java

compileJava {

//enable incremental compilation

options.incremental = true

}| Kotlin plugin implements its own incremental compilation |

In practice

import org.apache.commons.math3.complex.Complex;

public class Library {

public Complex someLibraryMethod() {

return Complex.I;

}

}Complexis a dependency ofLibraryif

Complexis changed, we need to recompileLibraryif

ComplexUtilsis changed, no need to recompile

Gotcha

import org.apache.commons.math3.dfp.Dfp;

public class LibraryUtils {

public static int getMaxExp() {

return Dfp.MAX_EXP;

}

}Dfpis a dependency ofLibraryUtilsso if

MAX_EXPchanges, we should recompileLibraryUtils, right?

Wait a minute…

javap -v build/classes/java/main/LibraryUtils.class

...

public static int getMaxExp();

descriptor: ()I

flags: ACC_PUBLIC, ACC_STATIC

Code:

stack=1, locals=0, args_size=0

0: ldc #3 // int 32768

2: ireturnreference to

Dfpis gone!compiler inlines some constants

JLS says compiler doesn’t have to add the dependent class to constant pool

What Gradle does

Analyze all bytecode of all classes

Record which constants are used in which file

Whenever a producer changes, check if a constant changed

If yes, recompile everything

Annotation processors

Disable incremental compilation (working on it!)

Implementation of the annotation processors matter at compile time

Don’t add annotation processors to compile classpath

or we cannot use smart classpath snapshotting

Annotation processors

Use annotationProcessorPath:

configurations {

apt

}

dependencies {

// The dagger compiler and its transitive dependencies will only be found on annotation processing classpath

apt 'com.google.dagger:dagger-compiler:2.8'

// And we still need the Dagger annotations on the compile classpath itself

compileOnly 'com.google.dagger:dagger:2.8'

}

compileJava {

options.annotationProcessorPath = configurations.apt

}Variant aware dependency management

Producer vs consumer

A

consumerdepends on aproducerThere are multiple requirements

What is required to compile against a

producer?What is required at runtime for a specific configuration?

What artifacts does the producer offer?

Is the

producera sub-project or an external component?

What do you need to compile against a component?

Class files

Can be found in different forms:

class directories

jars

aars, …

Question: do we need to build a jar of the producer if all we want is to compile against it?

Discriminate thanks to usage

Give me something that I can use to compile

Discriminate thanks to usage

Sure, here’s a jar

Discriminate thanks to usage

But we can be finer:

Sure, here’s a class directory

Discriminate thanks to usage

Or smarter:

mmm, all I have is an AAR, but don’t worry, I know how to transform it to something you can use for compile

The Java Library Plugin

will provide consumers with a class directory for compile

will provide consumers with a jar for runtime

As a consequence:

only

classestask will be triggerred when compilingjar(and thereforeprocessResources) only triggerred when needed at runtime

Conclusion

Use the Java Library Plugin!

Slides: https://melix.github.io/javaone-2017-max-incremental Discuss: @CedricChampeau