Blog

Automatic extraction of Helium images with JSol’Ex

14 June 2024

Tags: solex jsolex solaire astronomie

In this post, I will use a specific use case, the extraction of Helium images, to explain how I approach the development of JSol’Ex, with the aim of making it simpler to use from one release to the next.

As a reminder, the official software is not JSol’Ex but INTI, by Valérie Desnoux.

How the Sol’Ex works

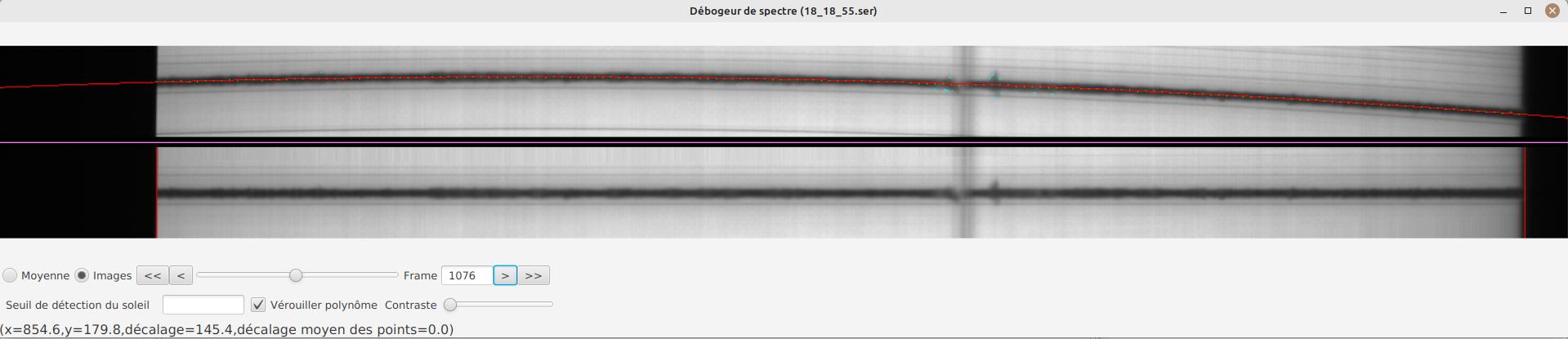

Let us first recall the basic principle of Christian Buil’s Sol’Ex. The idea is to reconstruct an image of the sun, line by line, from a video in which each frame shows a portion of the solar spectrum centered on the particular line being studied. The most commonly studied line is H-alpha which, in the solar spectrum, appears in absorption, that is to say as a dark line in an image of the spectrum:

At the top, the image as found in the video: the line is "distorted". At the bottom, the "corrected" image. The blue dots correspond to the detection of the Doppler effect, and the dark vertical lines correspond to sunspots.

Software such as INTI and JSol’Ex rely on the fact that the studied line is the darkest one in the image to get their bearings. One fundamental aspect of the reconstruction, which I honestly had not understood when I started this project, is that all of the signal needed to rebuild the H-alpha image lies within this dark line. Indeed, even though it looks "black", there are in fact tiny variations within this line, and those are what we use to reconstruct an image!

Tip: 8 bits vs 16 bits

This explains why it is important to acquire in 16 bits rather than 8 bits.

With an 8-bit acquisition, only 256 distinct values are available to encode the entire dynamic range of the image.

If the signal lies only in the center of the line, it quickly becomes clear that very few values are left to describe what happens in that small region.

With 16 bits, we significantly increase our ability to encode distinct levels in the center of the line, since 65536 values are now available for the whole image!
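To put rough numbers on the 8-bit versus 16-bit argument, here is a minimal Python sketch. The function name and the 5% figure are assumptions chosen purely for illustration: the actual share of the dynamic range occupied by the line core depends on the exposure and the instrument.

```python
def levels_in_line_core(bit_depth: int, core_fraction: float) -> int:
    """Number of distinct pixel values available inside the line core.

    core_fraction is the (assumed) share of the full dynamic range
    occupied by the dark line core, between 0 and 1.
    """
    total_levels = 2 ** bit_depth
    return int(total_levels * core_fraction)


# Assumption for illustration: the line core spans 5% of the dynamic range.
for depth in (8, 16):
    print(depth, "bits ->", levels_in_line_core(depth, 0.05), "levels")
```

Under this assumption, an 8-bit acquisition leaves about a dozen levels to describe the solar surface, whereas a 16-bit acquisition leaves a few thousand.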

The case of Helium lines

Dark-line detection makes it possible, provided the spectrum cropping window is relatively small, to focus on one line to study. This works very well for the H-alpha, Magnesium, Calcium K or H lines, etc., which are absorption lines.

The Helium line, on the other hand, is an emission line: it is "bright" instead of dark, but it is also impossible to make out in most frames because it is drowned in the flux. As a result, software cannot find it.

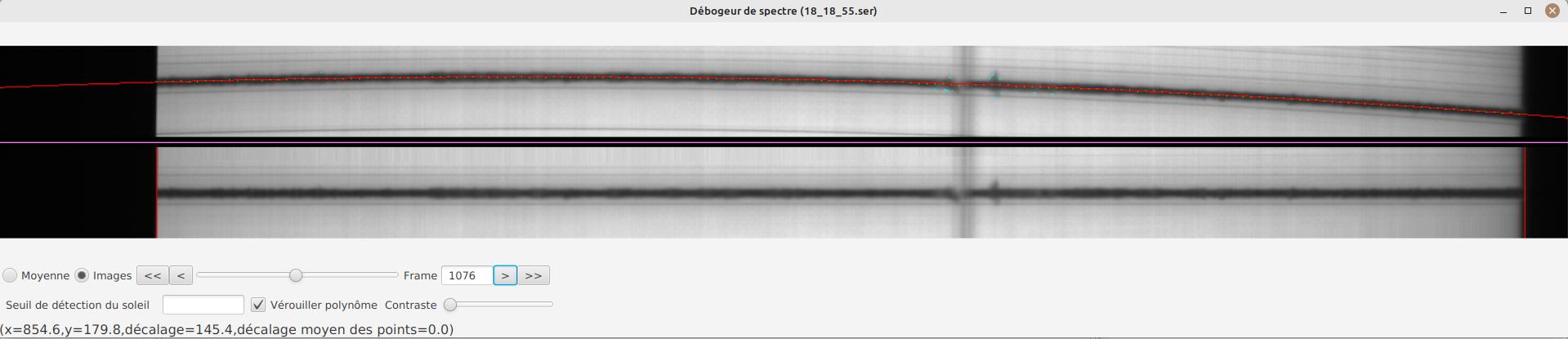

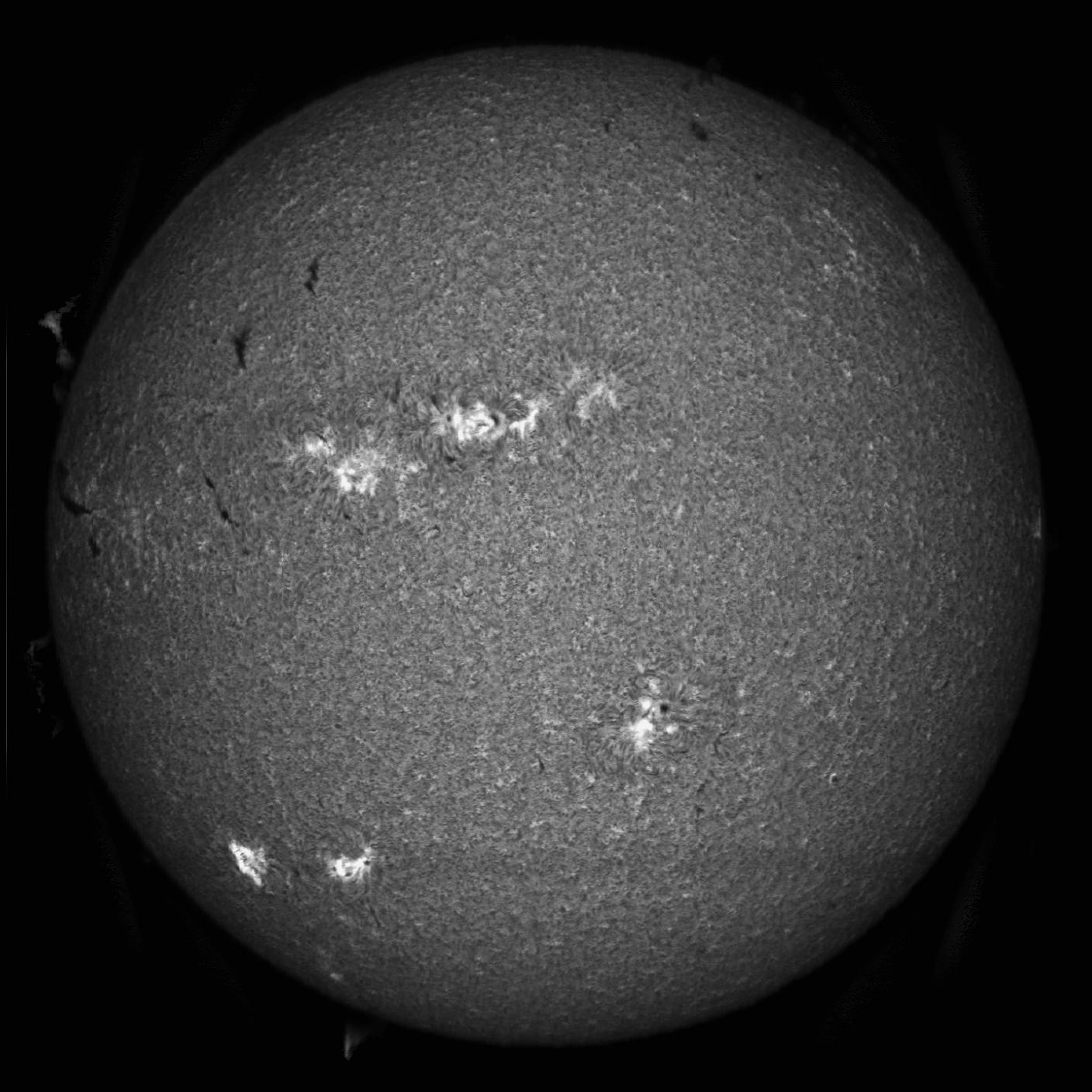

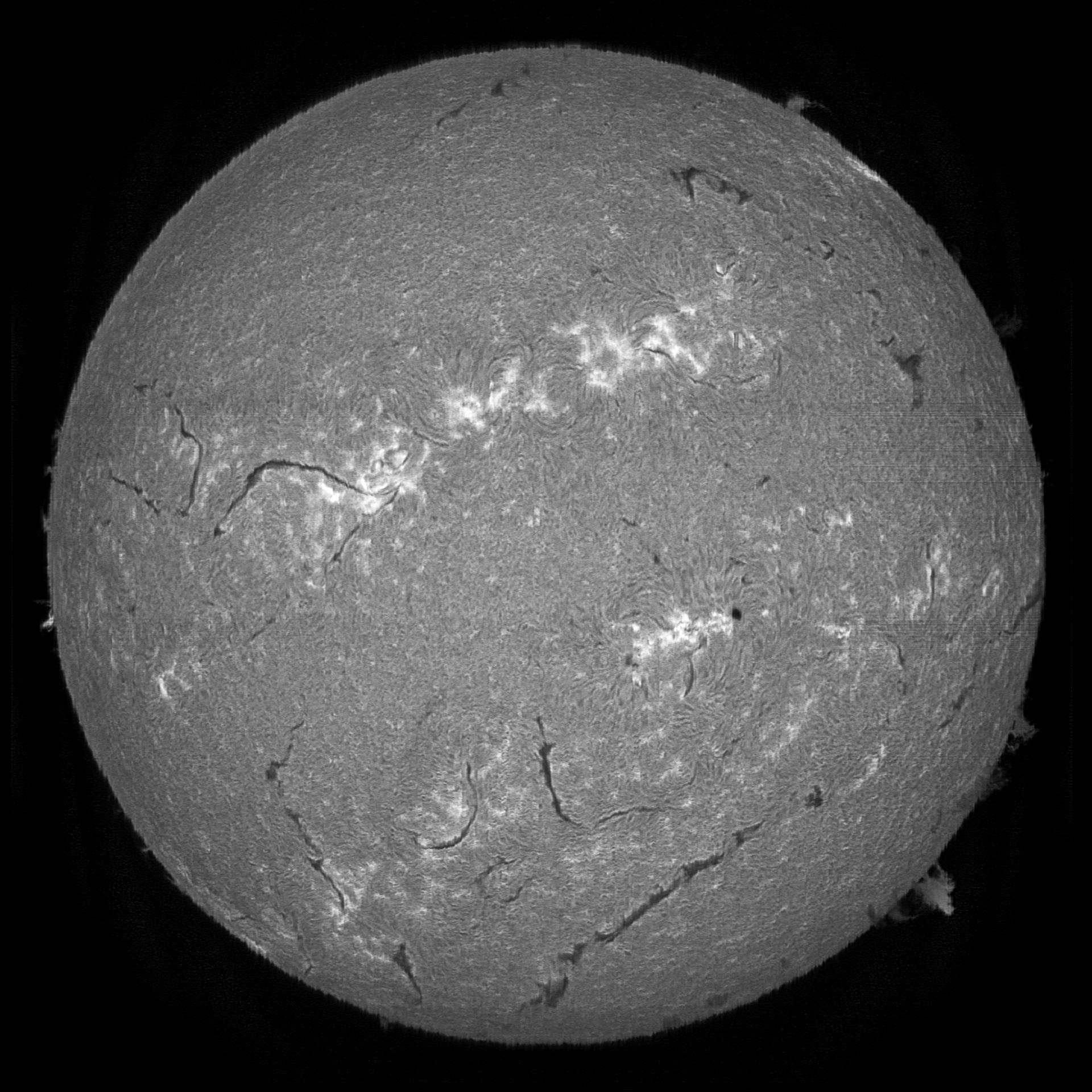

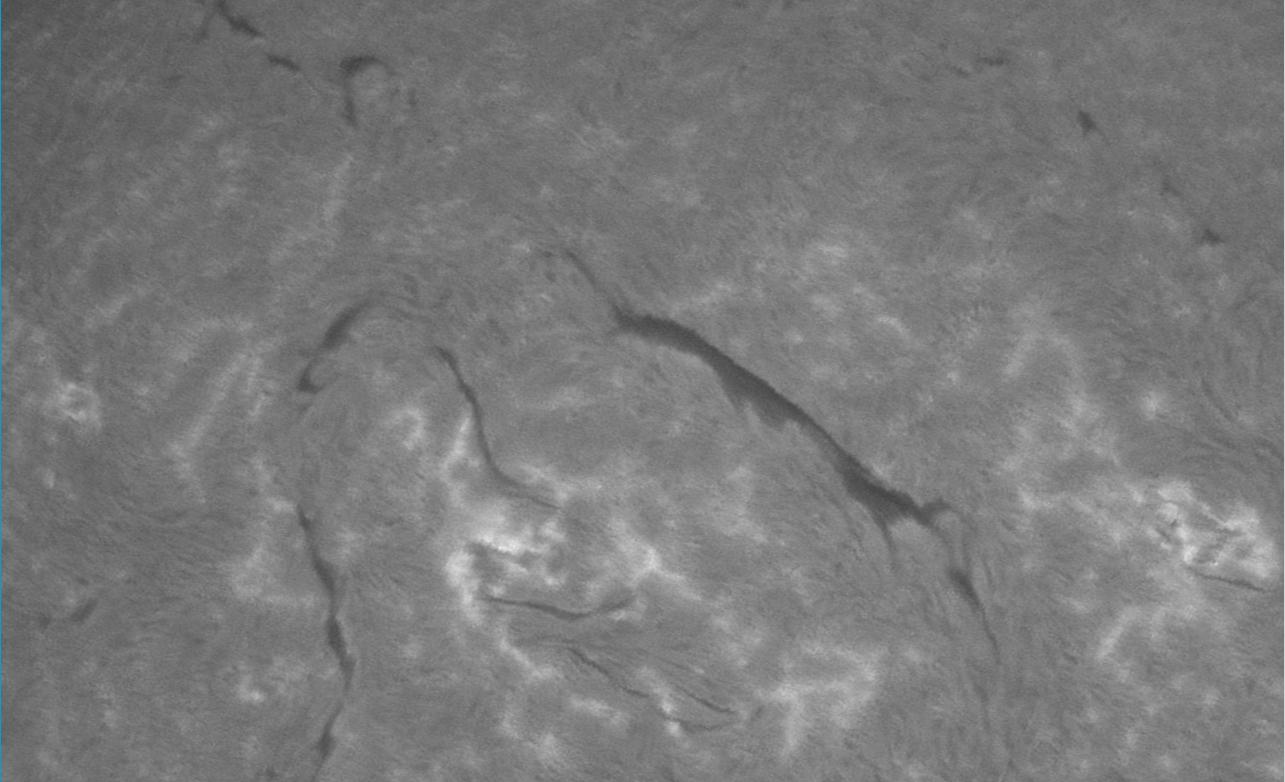

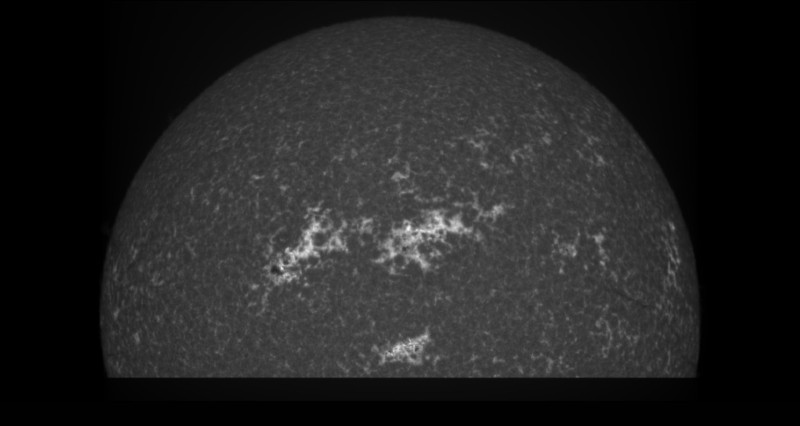

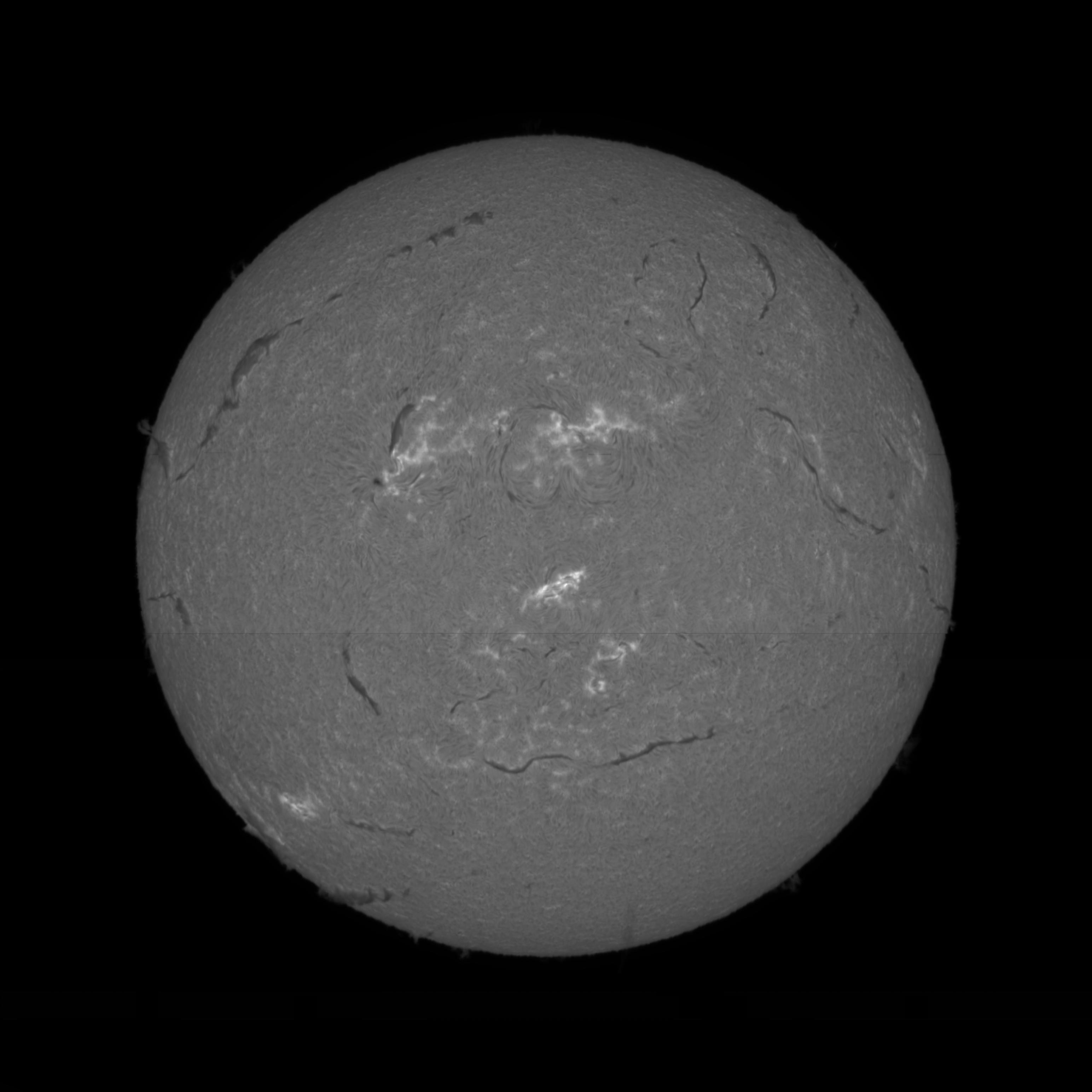

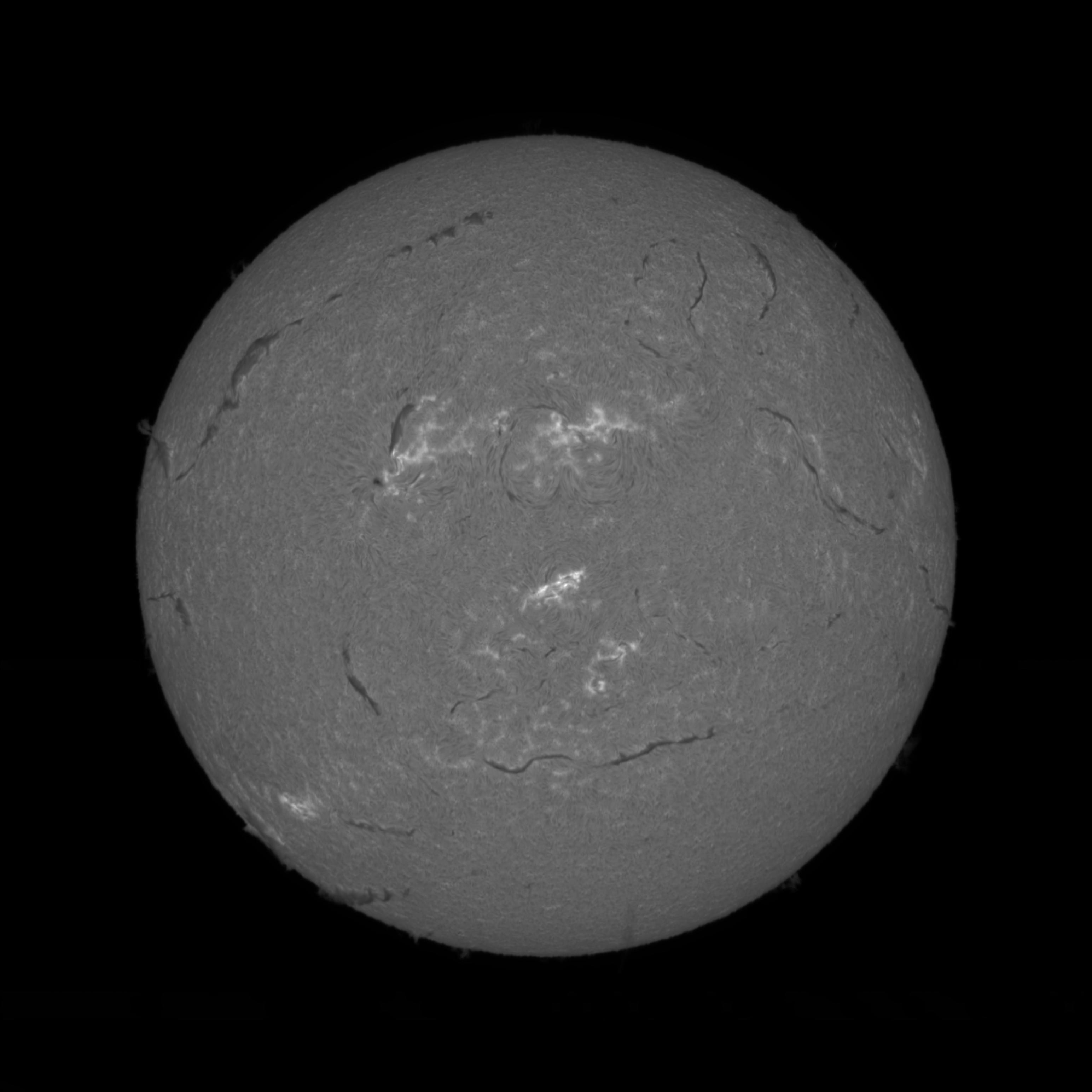

So how does JSol’Ex, in its latest versions, manage to produce a Helium image like the one below in a single click? The question is interesting not only from a technical standpoint, but also as a way to understand how I approach the development of JSol’Ex.

Christian Buil’s website is, once again, a gold mine of information here: to produce such an image, one has to take a dark line as a reference, then apply a "pixel shift" to "find" the Helium line. Finally, the continuum (a second image) has to be subtracted.

This notion of pixel shift is well known to seasoned Sol’Ex users. The principle is relatively simple: instead of reconstructing an image from the center of the detected line (the red line in the spectral image above), we reconstruct an image by shifting up or down by a few pixels. The same principle makes it possible to create Doppler images of the sun, which show in blue the regions moving towards us and in red those moving away. One can even produce rather spectacular timelapses, such as this animation which I made on June 5, 2024 and which the software generates in a few minutes:
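The pixel-shift principle can be sketched in a few lines of Python. This is a simplified illustration, not the actual JSol’Ex implementation: the function name `reconstruct`, the frame layout (`frame[y][x]`) and the clamped integer shift are all assumptions. Real software typically models the line with a polynomial and interpolates at sub-pixel positions.

```python
def reconstruct(frames, line_rows, shift=0):
    """Build one image row per video frame by sampling at (line center + shift).

    frames:    list of 2D frames, each a list of rows (frame[y][x])
    line_rows: for each frame, the detected line row for every column x
    shift:     pixel shift relative to the line center (0 = line center)
    """
    image = []
    for frame, rows in zip(frames, line_rows):
        height = len(frame)
        out_row = []
        for x, y_center in enumerate(rows):
            # Clamp so that the shifted position stays inside the frame
            y = min(max(y_center + shift, 0), height - 1)
            out_row.append(frame[y][x])
        image.append(out_row)
    return image
```

Calling `reconstruct(frames, line_rows, 0)` would give the line-center image, while calling it with two opposite shifts and comparing the results is the starting point of a Doppler image.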

As Christian explains in this video, finding this pixel shift is not necessarily simple. First, the "dark" reference line has to be found: traditionally, the sodium doublet is used as a landmark, and then either the Sodium D2 line or the neighboring Fe I line serves as the reference.

Next, the pixel shift needed to "land" on the Helium line has to be found. Christian’s video does not show the latest improvements that Valérie Desnoux has added to INTI to make things simpler but, roughly, one has to:

- make an overexposed capture, which lets us "see" the helium line
- make a "normal" capture, to get the image that will actually be processed
- open INTI and process the first video to obtain the average image showing the Helium line at the edge of the field
- process the second video in "free line" mode ("raie libre"), use "automatic polynomial" ("polynôme automatique") and open the average image computed during the first run to find the shift
- finally, use another piece of software such as i-Spec to perform the subtraction

This procedure, although documented, is very intimidating for users, which explains why few have taken up the challenge.

In JSol’Ex, the procedure is simplified as much as possible:

- make a "classic" capture that includes the reference line (e.g. Sodium D2), using a cropping window wide enough to also include the nearby Helium line
- open the file in JSol’Ex: the images are generated automatically, with nothing to compute and no continuum subtraction to perform, just one click!

It only takes a few seconds!

The simplification process

ImageMath

Getting there took many improvements, delivered progressively over successive releases. The first was the scripting system I called "ImageMath" (the name is inspired by PixelMath in PixInsight): it lets users write scripts to produce images that JSol’Ex does not produce on its own. This made it possible to write a script that produces a Helium image from a single SER file:

    [params]
    # Enter the value of the line shift
    RaieHelium = -85
    # Lower and upper bounds for the continuum
    ContinuumLo=-80
    ContinuumHi=-70
    # Image stretch
    Stretch=10

    ## Temporary variables
    [tmp]
    continuum = max(range(ContinuumLo,ContinuumHi))
    helium_raw = autocrop(img(RaieHelium) - continuum)

    ## Now the images!
    [outputs]
    helium = asinh_stretch(helium_raw, blackPoint, Stretch)
    helium_fixed = asinh_stretch(fix_banding(helium_raw;BandWidth;BandIterations),blackPoint, Stretch)
    helium_color = colorize(helium_fixed, "Helium (D3)")

Note, however, that this script still requires determining the shift of the Helium line, as well as the position of the continuum. This could nevertheless be done easily with the same SER file by opening it in the "line debugger", a procedure I described at the time in a video.

This procedure made it possible to obtain a Helium image in under 5 minutes, which was already a clear improvement over the previous situation: there was no longer any need to record 2 separate videos, and the pixel shift was computed by the software with the user’s assistance.

Although this was a notable improvement, we can do even better.

Spectral profiles

JSol’Ex has long displayed, in a tab, the "spectral profile" of the image being studied, and it can also compute the dispersion of an image, measured in Ångströms per pixel. This profile gives the intensity of the lines for a given pixel shift. Since version 2.3.0, however, I have added a feature that automatically detects the studied line by comparing this profile with a reference profile (the data comes from the BASS2000 database). Thanks to this, it is now possible to know how the profile under study "aligns" with the reference profile. Since we know both the position of the reference line (Sodium D2, for example) and the dispersion, we can compute by how many pixels we must move to find the Helium line!

This feature is also available in scripts under the name `find_shift`.
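The arithmetic behind this is simple enough to sketch in Python. This is only an illustration of the principle, not the signature or implementation of `find_shift`: the helper name `pixel_shift`, the dispersion of 0.17 Å/pixel and the sign convention (negative towards shorter wavelengths) are assumptions. The wavelengths are the usual values for Helium D3 and Sodium D2.

```python
HELIUM_D3 = 5875.62  # wavelength in Å
SODIUM_D2 = 5889.95  # wavelength in Å


def pixel_shift(target_wavelength, reference_wavelength, dispersion):
    """Pixel shift from the reference line to the target line.

    dispersion is expressed in Å per pixel. The sign convention
    (negative = shorter wavelength) is an assumption of this sketch.
    """
    return (target_wavelength - reference_wavelength) / dispersion


# Assumed dispersion, for illustration only
shift = pixel_shift(HELIUM_D3, SODIUM_D2, 0.17)
print(round(shift))  # -84
```

With this assumed dispersion, the result is of the same order as the value of -85 entered by hand in the script shown earlier.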

Continuum subtraction

At this stage, we have an image that we know contains a reference line (Sodium D2 or iron Fe I), and we also know the pixel shift of the Helium line. What is still missing is the continuum subtraction. Here again, the script above shows that a value had to be entered "by hand" to determine what to subtract. A simple way to proceed was, once more, to open the debugger and look for the brighter rows in the spectrum: since the eye is fairly sensitive to changes in contrast, it was not too hard to find a reasonable interval for the continuum.

However, if we want fully automatic processing, we can no longer rely on a "guesstimated" value. A naive way to solve the problem would have been to use a fixed shift (15 pixels, for example). That does not work, however, for several reasons:

- the shift depends on the resolution (pixel size, dispersion)
- we may land on a line that is too dark, since this region of the spectrum is fairly contrasted
- the result is very sensitive to exposure

For this reason, I added a feature that computes a "synthetic continuum".

Note that, unlike the "continuum" image, which uses a fixed (and configurable) shift in standard processing, this synthetic continuum is used only in the context of Helium processing, or when calling the continuum() function in a script.

The idea behind this function is to compute an image that best represents the continuum to subtract. Instead of using a single pixel shift, the computation is based on several shifts (so this function requires more resources during processing). The first thing to understand, then, is that the result is not an image at a precise wavelength, but a synthetic image based on statistical properties.

First, images are sampled at different pixel shifts, from the minimum to the maximum allowed by the spectrum cropping window and the distortion. For example, if each frame is 2000x200 pixels, there are potentially 200 integer shifts (the height of the image). However, because of the distortion, only a subset of them yields complete lines during reconstruction (say 180 rows, which would give 180 images). Out of these 180 possible shifts, for performance reasons we only keep a sample (about 1/3), which gives us 60 images to study. From these 60 images, we discard those whose pixel shift is too close to the reference line, since they obviously do not correspond to the continuum.

Then the statistical analysis begins: for each of these images, we compute its mean value. Next, we compute the mean of these means and keep only the images whose mean is greater than that overall mean.

At this stage, we are left with a few candidates that are bright enough to be considered continuum, but we still have a list of images. The last step is therefore to compute the median of all these images, to obtain one and only one "synthetic continuum".

To recap the steps, this gives for example:

- we have 60 images at (integer) pixel shifts
- we remove the 8 that lie at the center of the studied line, leaving 52
- we compute the mean value of each of these images: 5000, 8000, 5200, 6400, …
- we compute the mean of all these means, e.g. 6000
- we keep only the images whose mean is greater than this mean; say 30 remain
- we compute the median of these 30 images

All that remains is to subtract the computed synthetic continuum from the image at the pixel shift of the Helium line, and we obtain the Helium image above, in just a few seconds!
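The steps above can be sketched in pure Python as follows. This is an illustrative reading of the description, not the JSol’Ex source code: the function name, the `line_exclusion` parameter and the representation of images as lists of rows are assumptions, and the median is taken pixel by pixel, which is one plausible interpretation of "the median of these images".

```python
from statistics import mean, median


def synthetic_continuum(images_by_shift, line_exclusion=4):
    """Compute a synthetic continuum from images sampled at several shifts.

    images_by_shift: dict {pixel_shift: image}, image = list of rows of numbers
    line_exclusion:  shifts with |shift| <= this value are considered too close
                     to the reference line (hypothetical parameter)
    """
    # 1. Drop the images that are too close to the reference line
    candidates = [img for shift, img in images_by_shift.items()
                  if abs(shift) > line_exclusion]
    # 2. Mean value of each remaining image
    means = [mean(v for row in img for v in row) for img in candidates]
    # 3. Mean of all these means
    threshold = mean(means)
    # 4. Keep only the images brighter than that overall mean
    #    (assumes at least one image is brighter than the average)
    bright = [img for img, m in zip(candidates, means) if m > threshold]
    # 5. Per-pixel median of the retained images
    height, width = len(bright[0]), len(bright[0][0])
    return [[median(img[y][x] for img in bright) for x in range(width)]
            for y in range(height)]
```

The Helium image would then be obtained by subtracting this result, pixel by pixel, from the image reconstructed at the Helium shift.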

Conclusion

In this post, I described how I go about improving JSol’Ex. First, a specific need is identified, here producing a Helium image. From that need, the question is how to simplify such processing. The simplification is only ever built on what I have available at a given moment. For example, the first step was to add a scripting system which, beyond processing Helium images, makes it possible to exploit the wealth of data available in a scan. Those interested can learn more about scripts in this tutorial.

Next came features that simplify configuration for users, namely automatic line detection, followed by using these new features to simplify image processing even further.

It is thus a very iterative method, but always with the goal of delivering increments that provide some added value to users. The good news is that I have many more ideas in mind!

JSol’Ex 2.2.0 and contrast improvement

25 April 2024

Tags: solex jsolex solaire astronomie

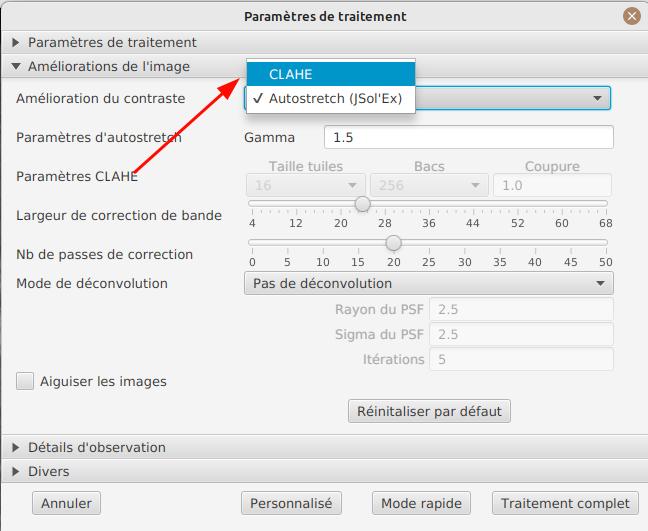

JSol’Ex 2.2.0 has just been released! In this version, I worked in particular on contrast improvement. Those who have heard me talk about this software know that I have long been grumbling about my contrast enhancement algorithm. JSol’Ex implements several algorithms. Historically, the first was an inverse hyperbolic sine transformation, which works relatively well at bringing out the darker areas but brightens everything else too much. The other algorithm, also used by INTI, is local histogram equalization (CLAHE).

The problems with CLAHE

CLAHE is an algorithm that noticeably improves contrast. However, I have never been very happy with the result. I do not know how Valérie Desnoux does it in INTI, which seems to fare better, but in JSol’Ex the results are fairly mediocre. In particular, I see several flaws:

- artifacts, due to the fact that this algorithm works on "tiles" of a given size. The larger the tile, the less aggressive the contrast enhancement, but the more visible the artifacts
- on the image of the sun, the limbs are always brightened, which leads me to the next point
- solar images look "flatter": the relief, in particular the darkening due to the sphericity of the sun, disappears. This is also a mechanical effect of the algorithm itself: the smaller the tiles, the fewer distinct values can be used. For a tile size of 16 pixels, there are at most 256 possible values (for a 16-bit image with 65536 values originally). Yet the best results on Sol’Ex are obtained with a tile size of 8 pixels, i.e. a reduction to at most 64 possible values.
- there are too many possible parameters: the tile size, the dynamic range and finally the "clip factor" for the equalization. Without going into detail, finding a combination that works well regardless of input image size, image dynamic range and image type (H-alpha, calcium) is almost impossible.
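The figures quoted for the tile sizes follow from a simple count: a square tile of N pixels per side holds N×N pixels, so its local histogram can distinguish at most N×N values. A minimal Python sketch of that reasoning, where the function name is hypothetical and the cap at the bit depth is added for completeness:

```python
def max_levels_per_tile(tile_size: int, bit_depth: int = 16) -> int:
    """Upper bound on distinct values inside one square tile.

    A tile of tile_size x tile_size pixels cannot contain more distinct
    values than it has pixels, nor more than the bit depth allows.
    """
    return min(tile_size * tile_size, 2 ** bit_depth)


for size in (8, 16, 64):
    print(size, "px tile ->", max_levels_per_tile(size), "values at most")
```

This reproduces the 256 and 64 values mentioned in the list for 16-pixel and 8-pixel tiles.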

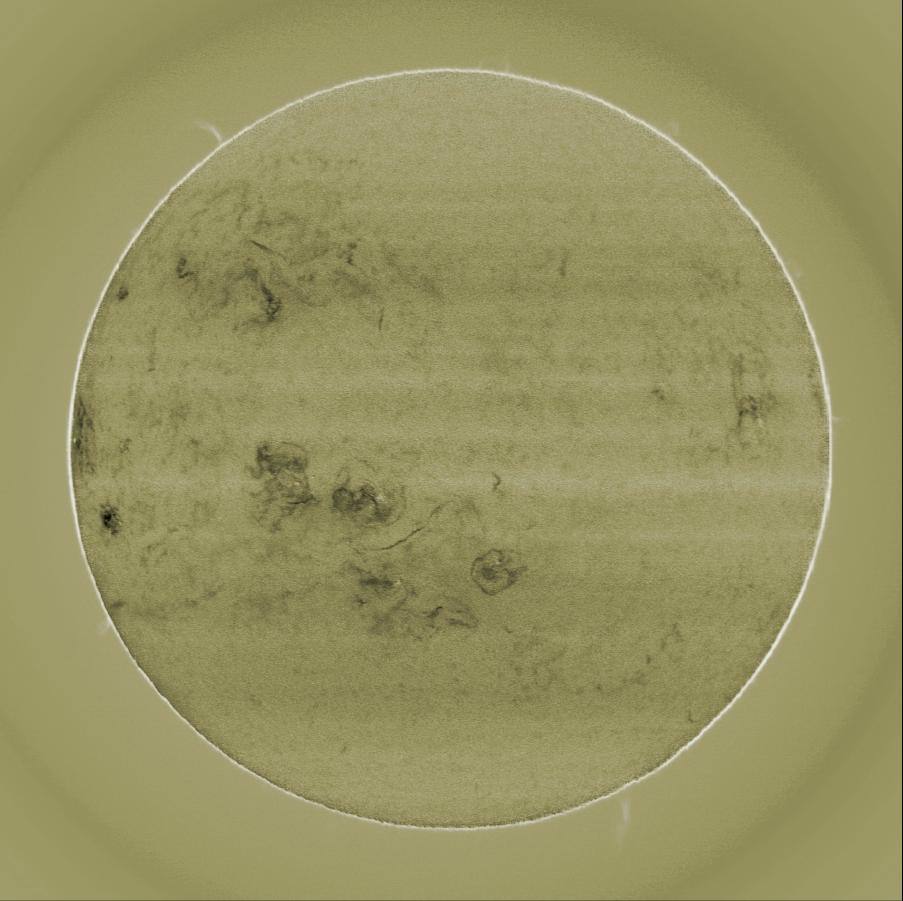

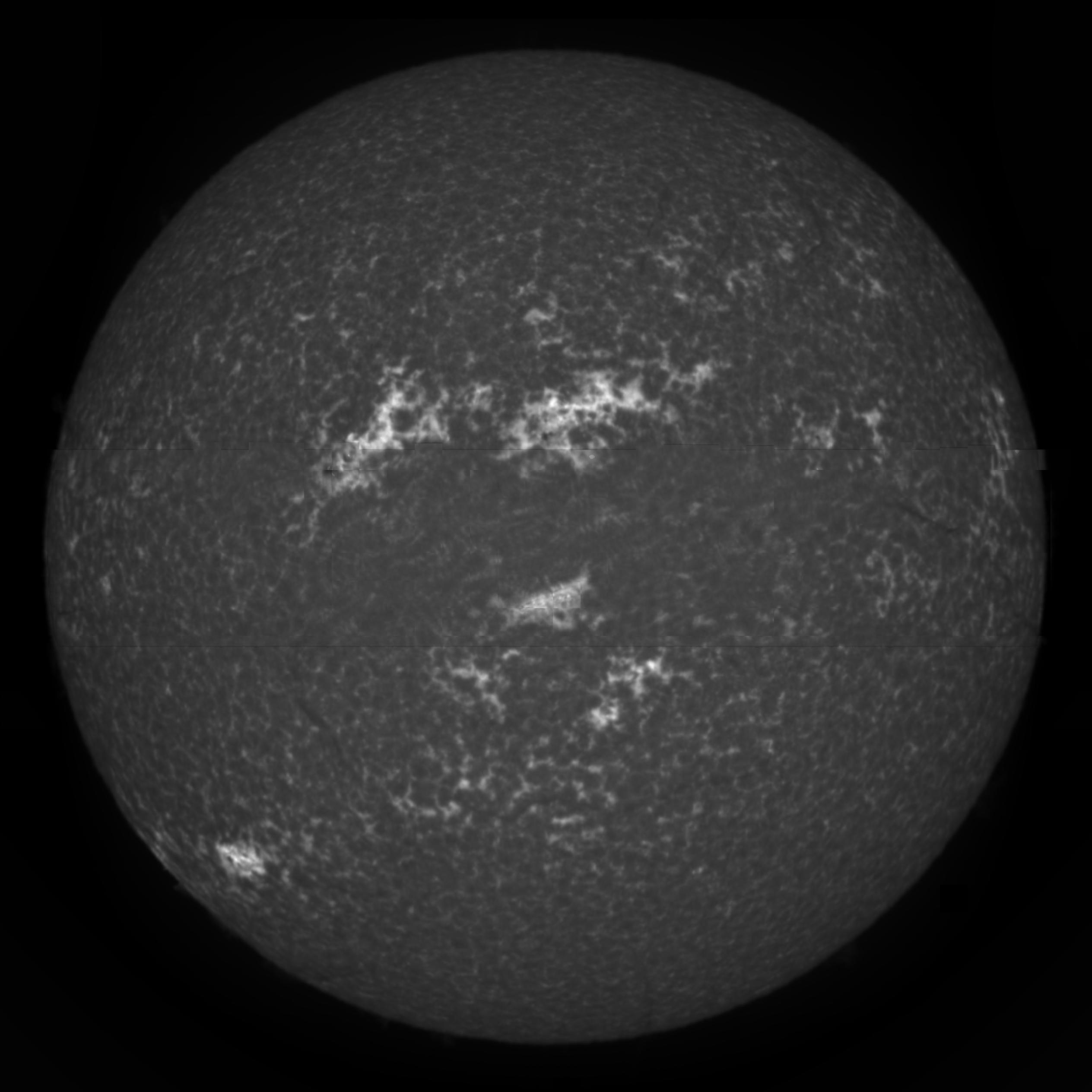

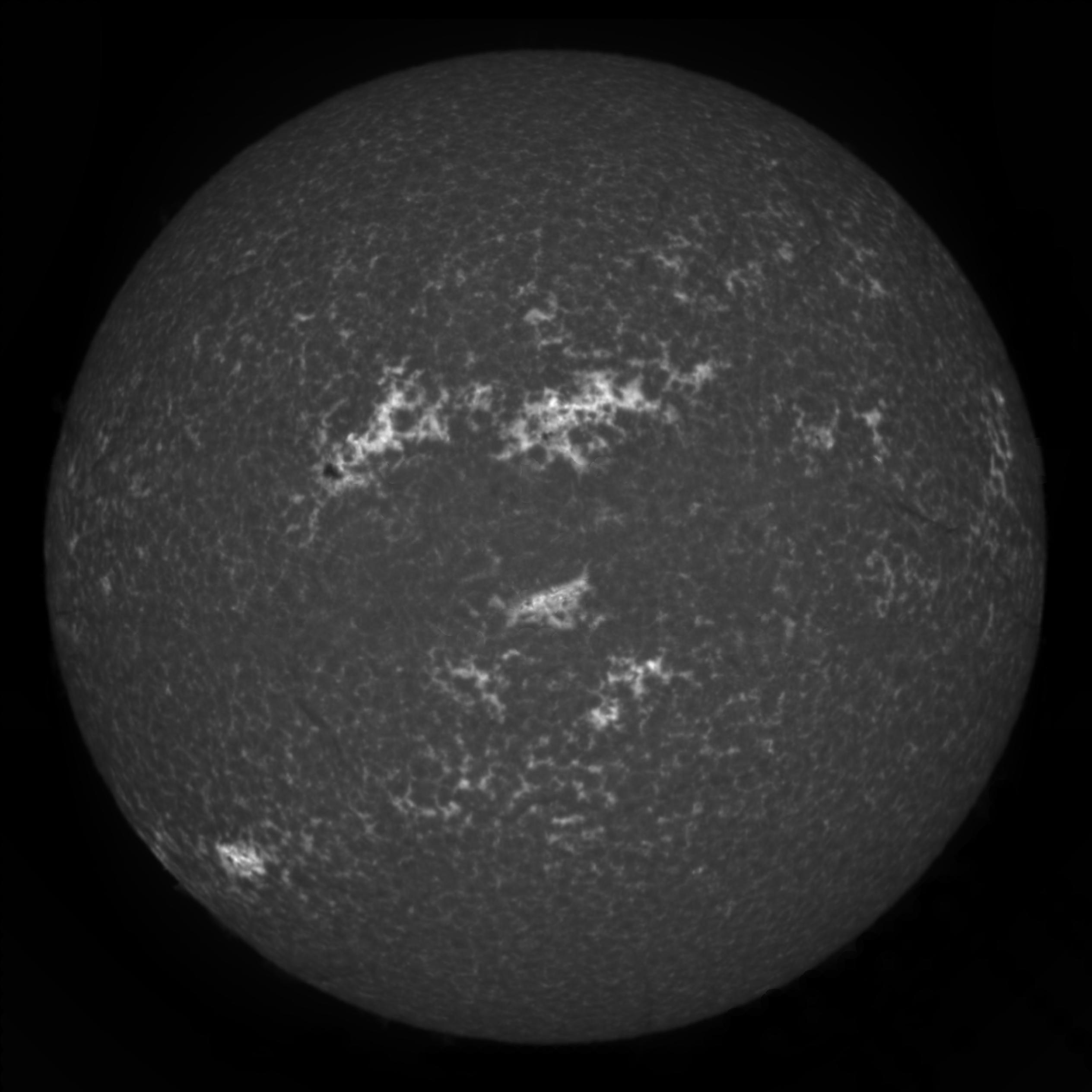

To illustrate my point, here is for example a solar disk (the SER file is the one available on Christian Buil’s website) processed with JSol’Ex 2.1 (the previous version), with default parameters and a tile size of 16 pixels (left) or 64 pixels (right):

We can already clearly see the brightening of the limbs, as well as "square" artifacts that appear as soon as the tile size increases. Since finding parameters that suit everyone is complicated, JSol’Ex already offered the option of changing these parameters (tile size, intensity, …), but I suspect few users did so.

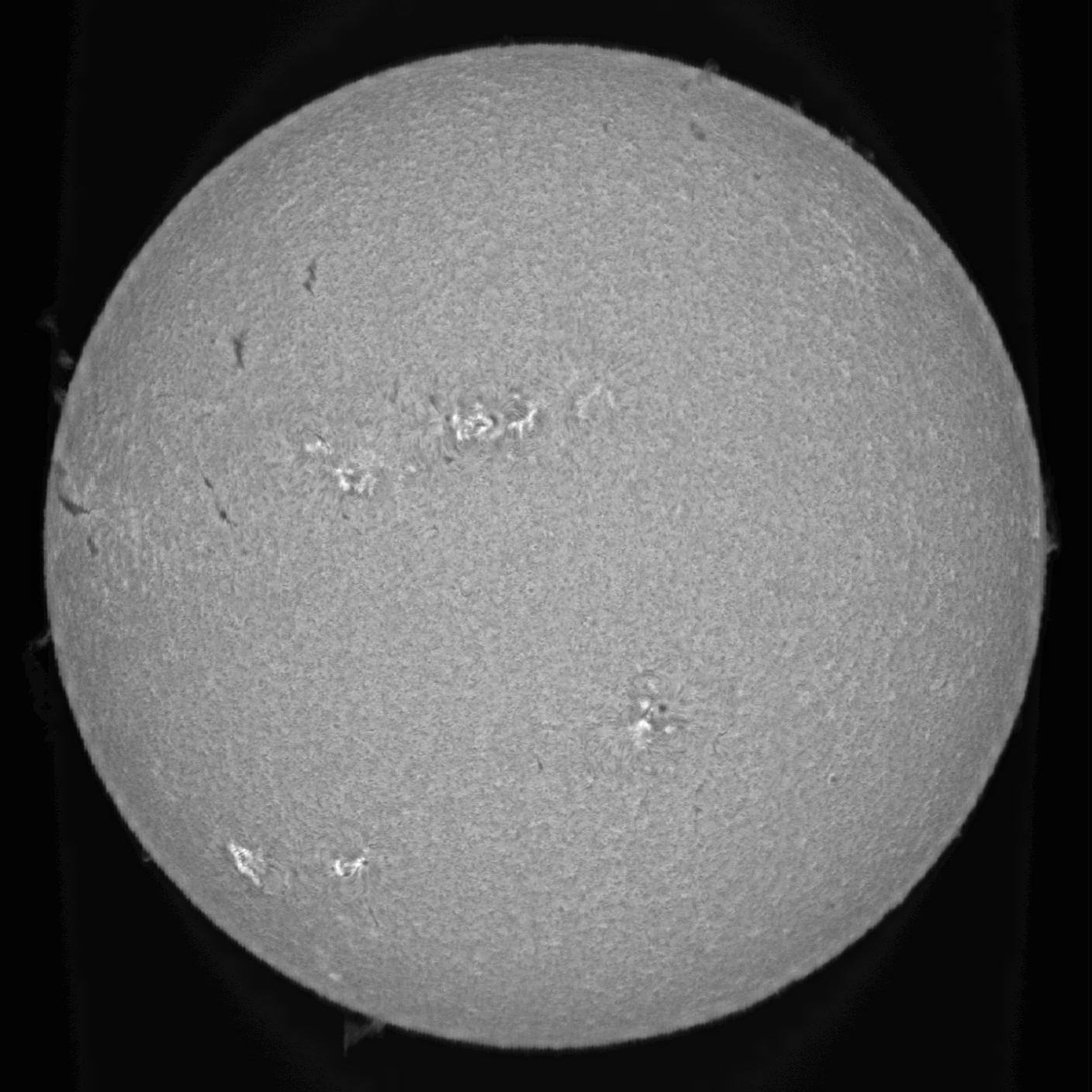

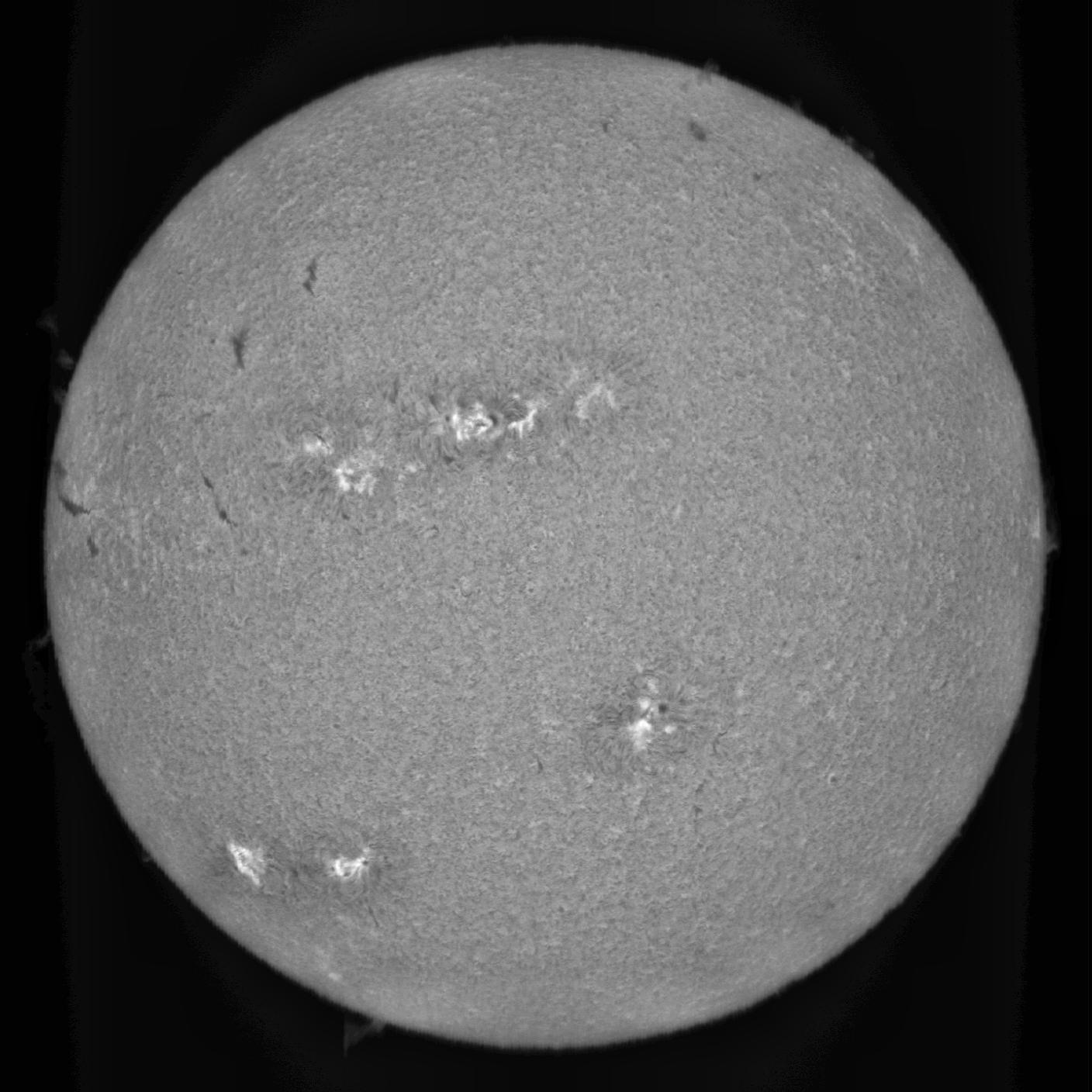

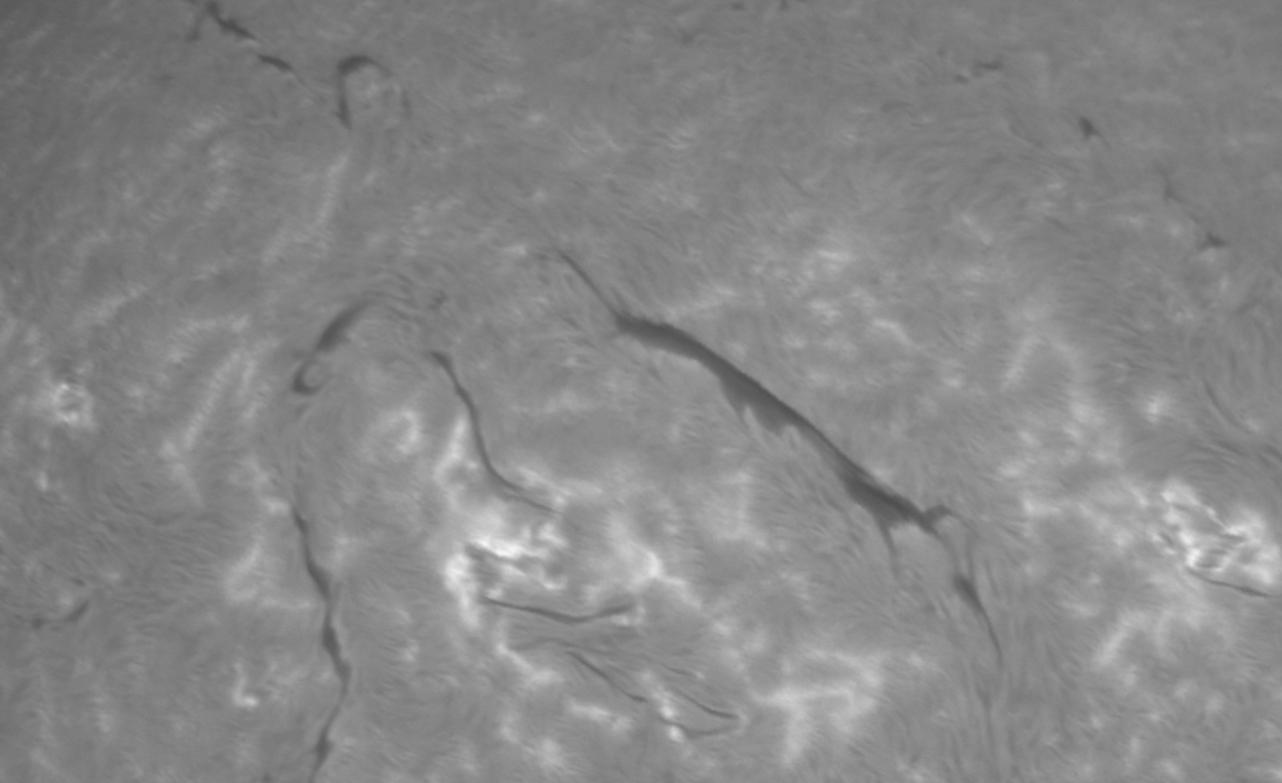

I therefore decided to add an algorithm dedicated to solar images in JSol’Ex 2.2. To whet your appetite for the rest of this post, here is what JSol’Ex 2.2 can produce from the same image:

New algorithm

The new algorithm is available in JSol’Ex 2.2 and is the one used by default. It combines several techniques, including a masked gamma correction, CLAHE and a dynamic stretch of the image, to produce an image more worthy of what Sol’Ex can do. I do not claim this algorithm is perfect, it has flaws of its own, but it seems to be a good compromise.

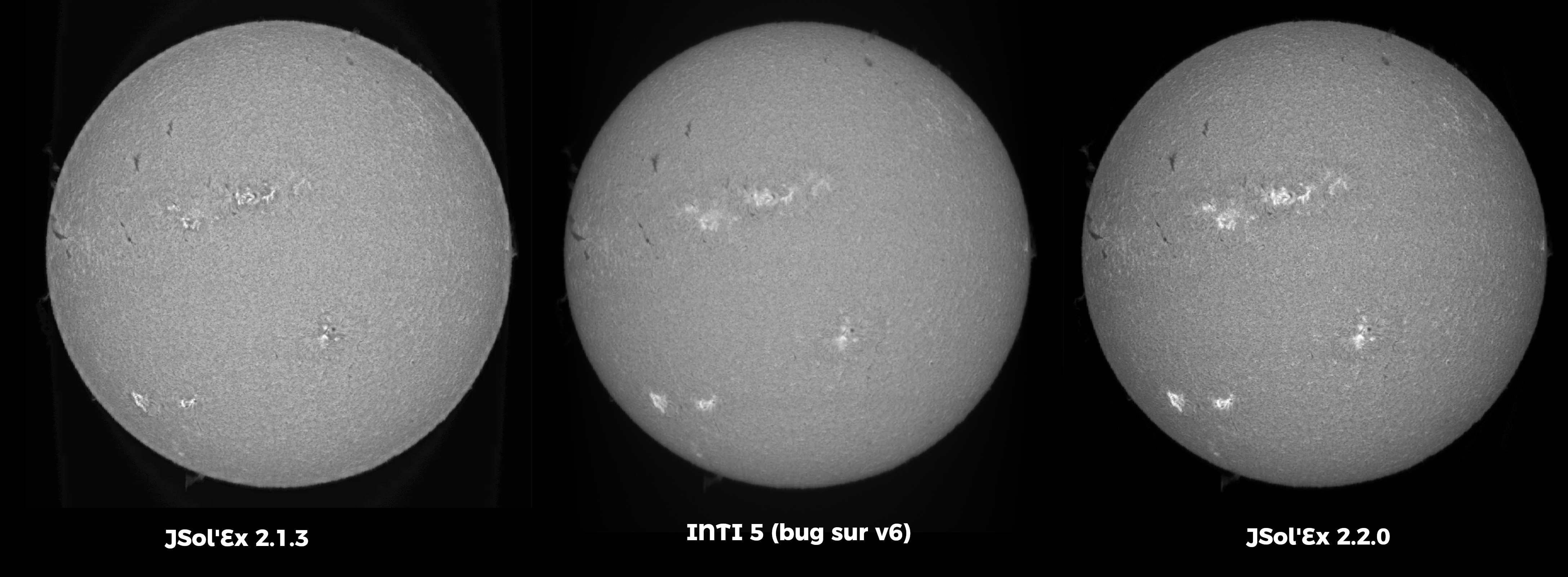

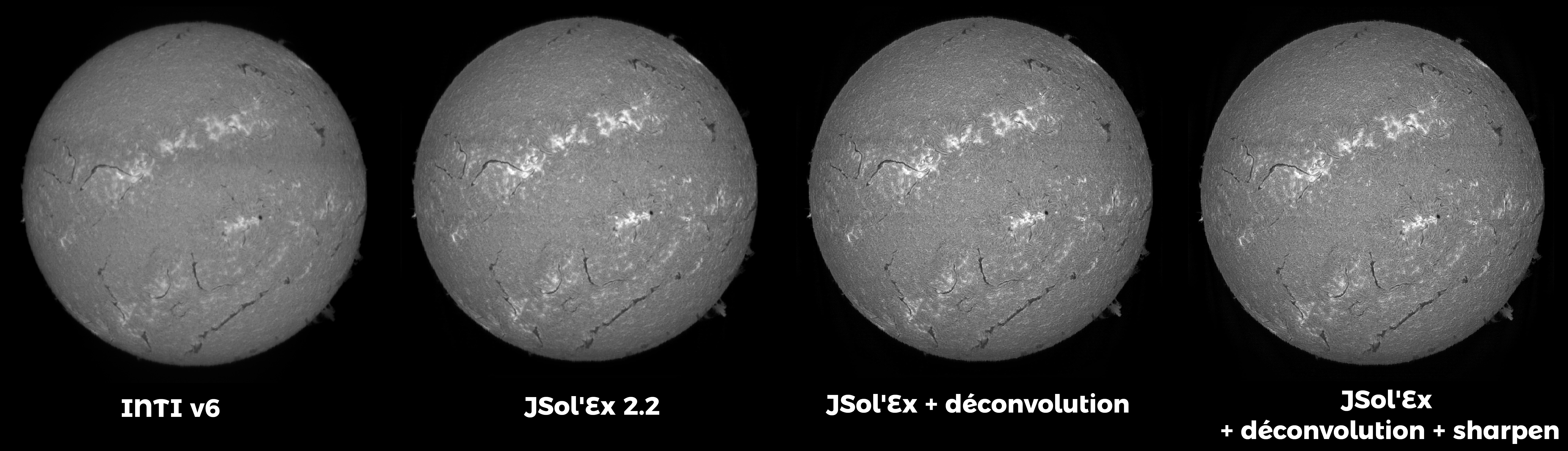

To check my claims, I methodically compared the images produced with the default parameters of INTI, JSol’Ex 2.1 and JSol’Ex 2.2 (the only changes being that autocrop is enabled and a vertical flip is applied so that the images are oriented the same way).

Let us start with the H-alpha demonstration video used by Christian Buil on his website.

Since the images can be hard to compare at reduced size, I invite you to right-click and open the image in a new tab to compare.

We can clearly see that JSol’Ex 2.1 did improve contrast, but at the cost of artifacts and a loss of relief. JSol’Ex 2.2, by contrast, produces a sharper image than INTI’s, without the flaws of CLAHE.

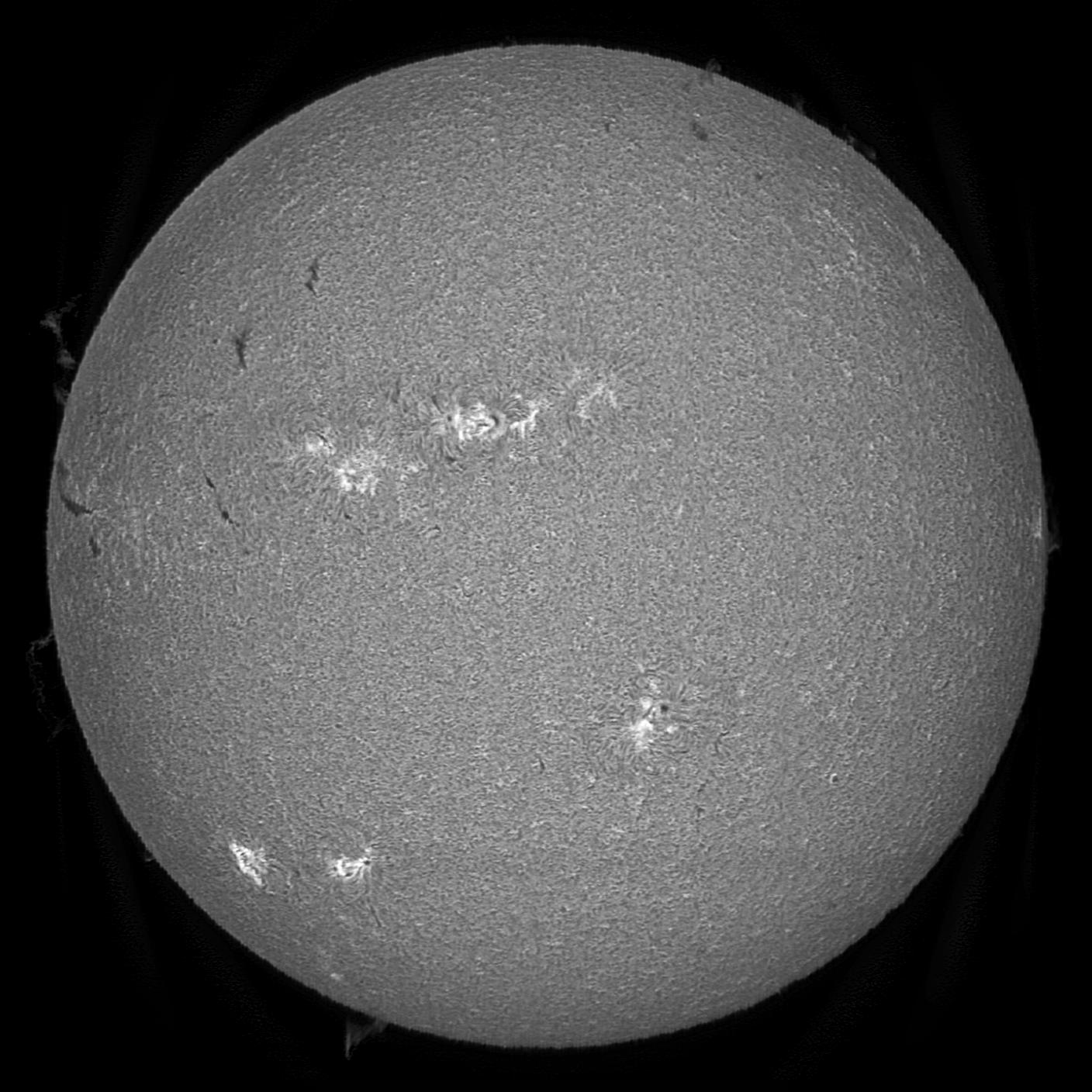

Let us continue with another H-alpha solar image, with more dynamic range:

Here again, JSol’Ex 2.1 produced a reasonable image, but one that was rather saturated and, above all, "flat". Version 2.2.0, for its part, offers better dynamic range while preserving the details and that impression of depth.

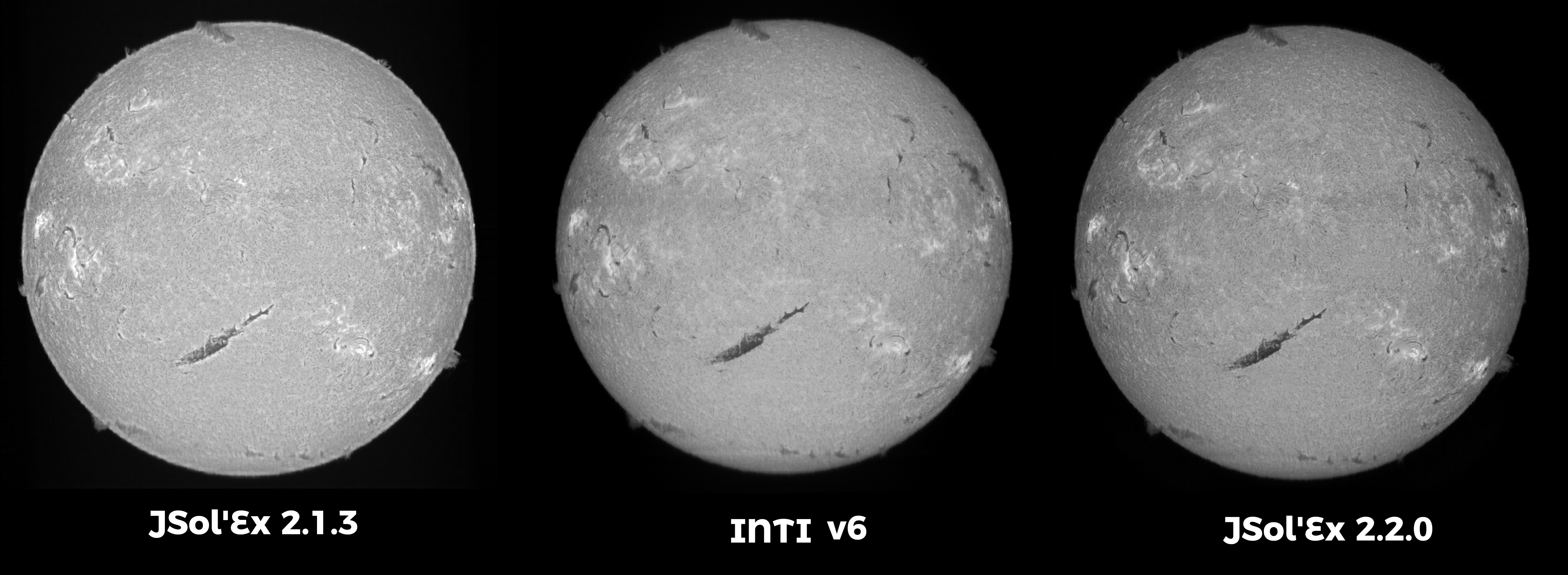

We can also compare the results on an image obtained with a longer focal length and a partial disk:

This time, all 3 programs do an honorable job. However, JSol’Ex 2.1.3 shows a less contrasted image than v2.2.0, while INTI v6 shows a slightly blurrier image.

Unfortunately, following a mishandling, I lost most of my calcium-line SER files. I was still able to make comparisons, on files that are far from ideal. The results are nonetheless interesting:

This time, JSol’Ex 2.1 did rather well. INTI once again does a remarkable job, but JSol’Ex 2.2 saturates the image too much. This is a flaw I hope to fix; it stems from the fact that calcium images have a histogram shaped like a Gaussian with a very wide base. It is nevertheless possible to reduce the saturation by choosing a weaker correction factor (for example 1.1 instead of the default value of 1.5), which I encourage you to do for Calcium images (keep 1.5 for H-alpha, lower it for calcium):

The result will, however, depend heavily on the initial exposure of your video. Here is another example in the calcium K line:

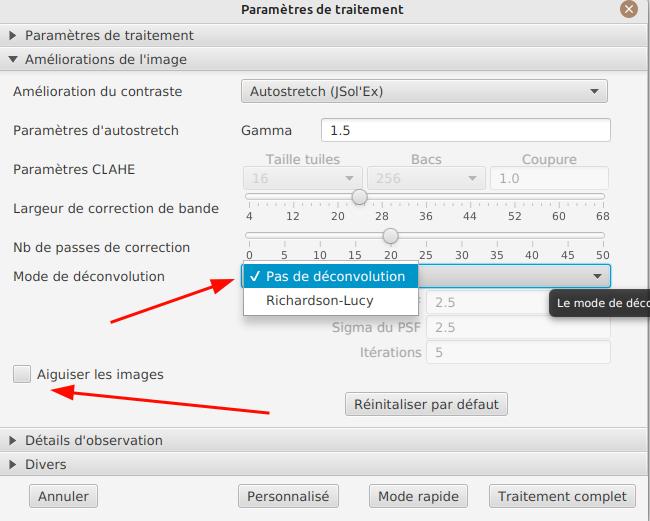

Note that if you are not satisfied with the new algorithm, it is entirely possible to switch back to CLAHE:

Finally, you are free to be more or less "aggressive" with the contrast enhancement. In the processing parameters, you can change the gamma factor, which darkens the image. As an example, if we push the sliders a bit far (to 4 in the following image, for instance), you will see that the image remains usable and, above all, that CLAHE’s tendency to flatten images has completely disappeared:

Going even further

First of all, JSol’Ex offers a scripting language that lets you go much further in processing, automatically generate animations, and so on.

Of course, the improvements in JSol’Ex 2.2 are available in scripts through 2 new functions: adjust_gamma, which performs a simple gamma correction on the image, and auto_contrast, which corresponds to the correction described in this blog post.
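For readers unfamiliar with gamma correction, here is a minimal Python sketch of the general technique. It is not the code behind `adjust_gamma`: the function name `adjust_gamma_sketch`, the 16-bit maximum and the convention that a factor above 1 darkens the image (chosen to match the description of the gamma factor given in this post) are assumptions.

```python
def adjust_gamma_sketch(image, gamma, max_value=65535):
    """Apply a plain gamma curve to an image stored as a list of rows.

    Each value is normalized to [0, 1], raised to the power `gamma`,
    then scaled back. With this convention, gamma > 1 darkens the
    midtones while leaving pure black and pure white unchanged.
    """
    return [[round(max_value * (v / max_value) ** gamma) for v in row]
            for row in image]


# A mid-gray pixel becomes darker, the extremes do not move
print(adjust_gamma_sketch([[0, 32768, 65535]], 1.5))
```

A masked variant, as mentioned for the new algorithm, would apply such a curve with a strength that varies across the image rather than uniformly.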

Finally, we have not yet talked about features that are disabled by default but were already available in JSol’Ex: deconvolution and sharpening. Both options can be enabled in the processing parameters:

I do not necessarily recommend ticking the "sharpen details" box if your images are too noisy. This last row compares the same image, processed with INTI v6, then with JSol’Ex 2.2 (the latest version) without deconvolution, with deconvolution, and finally with deconvolution and detail enhancement:

As far as I am concerned, I find the deconvolved version particularly pleasing to the eye, so I systematically enable deconvolution:

Conclusion

To conclude, in this post I presented the new contrast correction algorithm, enabled by default in JSol’Ex 2.2. I think the results are fairly convincing, and that JSol’Ex no longer has to be ashamed next to INTI when it comes to contrast-enhanced images. However, let me remind you once more that the official software is INTI, and that it is the only one validated with the rigor of Christian Buil and Valérie Desnoux. If you want to submit images to BASS2000, you must use INTI. I also advise you to always compare the results: do not assume that JSol’Ex does a better job, that is probably false.

For example, improvements remain to be made on calcium images. It is nevertheless entirely possible to change the default parameters, or even to switch algorithms.

Do not forget either that JSol’Ex offers other features such as automatic colorization of images, stacking, the creation of solar mosaics, and a particularly powerful scripting language that lets you, for example, generate animations automatically. I will therefore close this post with an example script that uses the new contrast enhancement to produce an animation that dives into the solar atmosphere by playing with the pixel shift:

    # Pixel shifts to apply, from -15 to +15, in steps of 0.5
    images=range(-15,15,.5)
    # Compute an image corrected for its transversalium lines (banding)
    corrigee=fix_banding(images,32,40)
    # Deconvolution
    decon=rl_decon(corrigee)
    # Resize
    redim=rescale_rel(autocrop2(decon,1.1),.5,.5)
    # Apply the contrast correction described in this post
    cst=auto_contrast(redim,1.5)

    [outputs]
    # Finally, produce the animation (75ms between frames)
    animation=anim(cst,75)

Bonus

I would also like to take this opportunity to share two videos aimed at Sol’Ex beginners, which I made recently:

Towards a civilizational collapse?

22 February 2024

Tags: ecologie climat politique civilisation

Putting words on paper, however digital, is a form of therapy: letting out what needs to come out, organizing one’s ideas, taking time to reflect, sometimes reassuring oneself. Predicting the future is obviously impossible, but there are feelings, signals of varying strength, that can give us an idea of what lies ahead. This post makes no scientific claim; it is rather a synthesis of the many sources I have read or watched on the subject over the last few years. At the heart of this text lies one question: are we witnessing the end of our civilization? After all, History is made of civilizations that disappeared, so why would ours survive any more than another? In the background is the question that worries me most: have we already sealed our children’s fate? Who is this post for? My family and my friends, probably. My children, surely, to express my regrets, my fears, but also my hope in them. And finally all those who should read it but never will, certainly.

Genesis

I have been sensitive to both ecological and scientific topics since my youth. I still remember the conversations with my friend Erwan in middle school. We would arrive early in the morning, well before classes started, and had, among other things, long conversations about the Earth and how to save it. At the time, we did not talk about global warming: the topics mostly revolved around pollution, the hole in the ozone layer, and the disappearance of moths or polar bears.

In this soil fertile for so-called environmentalist ideas, I was raised on sci-fi series, Star Trek in particular, a humanist series that left a deep mark on me. What came out of it was a dual culture: concern for protecting the environment, but also a deep respect for science and what it can explain about the world. I like the rigor of the scientific method, as well as its capacity for self-questioning, in contrast to the dogmatic viewpoints found, for example, in religions. As an agnostic, I looked for my answers in science and still do today, notably by applying the principle of Occam’s razor.

I had already discussed on this blog the subject of rationality and the reproaches it has earned me. This rationality is also what drives me to write and share my anguish: when one has a "scientific mind" and the information available today, it is difficult, if not impossible, to be optimistic. Worse, it is hard to imagine anything other than an outright collapse of our society. This realization, this pessimism, must be converted into energy, into action.

Let me make clear, however, that when I talk about civilizational collapse, I could not care less about the far right’s fantasies about the (cultural) great replacement, their racism, their xenophobia: I stand opposed to all of that. To me, the "Christian tradition of France" is nothing but a vast joke on the scale of Humanity, and I am among those who believe that equal rights are non-negotiable, whatever one’s origin, sexual orientation or political leaning.

No, I am talking here about a collapse of our way of life based on consumption, private property and globalization: I am talking about the possible disappearance of democracy and the return of war and the horrors that come with it.

What are we talking about?

Once you know what is happening with the climate, there are only three possible options: resignation, cynicism or action. For my children, I chose to act: that is notably why I went into politics a few years ago, admittedly at a local level since it was limited to municipal elections. It is also why I communicate a lot on ecological and political topics, even if it means annoying a few people.

One of the first things to realize is the climate emergency. I often discuss global warming with people who are aware of the issue but, paradoxically, few truly grasp the urgency of the situation. On the contrary, we hear that environmentalists are a nuisance, and the RN even goes so far as to claim that the IPCC exaggerates. Let us recall that the IPCC (GIEC in French) is an international body that synthesizes the conclusions of hundreds of studies and that, because of the extremely political nature of its reports, it is precisely the opposite that tends to happen: the reports offer different scenarios, and politicians prefer to look at the most optimistic ones.

Recently, Jean-Marc Jancovici, a world-renowned specialist on the subject and inventor of the "bilan carbone" (carbon footprint assessment) concept, was heard by the Senate, before elected officials who were at times stunned by his answers.

1.5 degrees is dead. 2 degrees, barring a comet strike or an economic collapse, is on its way to being dead.

Senate hearing of February 12, 2024

Yet none of Jancovici’s statements, and I feel ideologically close to him at least on these subjects, should be surprising: we have known this for a long time, report follows report, but inaction persists.

A large part of the problem is that it is difficult for a human being to quantify a 2-degree increase with our senses alone. Even today, a heatwave is systematically illustrated with holidaymakers at the beach, when we should be showing dried-up streams, forests going up in smoke, elderly people in distress, and farmers who can no longer water their crops.

It is important to understand that 2 degrees is a global average: warming is greater in some areas of the planet (the poles, for example) and smaller in others. A 2-degree difference on a planetary scale means 3 or 4 additional degrees in France (in 2022, we reached +2.7°). Between -1.8 and -18 degrees is precisely what separated the ice age from the pre-industrial era. Back then, there were glaciers several hundred meters high, and England was connected to the continent (the oceans were 120 meters lower). If such a small temperature difference could have such consequences, imagine what 2 or 3 extra degrees could do. Precisely because this is hard to imagine, the IPCC draws up scenarios and projections. It is thus rather reassuring that France is preparing for a +4° scenario.

Yet when France is hit by a "cold snap" (I put it in quotes because these are less frequent and less intense than 20 years ago), those climate-skeptic reflexes resurface, confusing weather (short term) with climate (long term).

Today we are, globally, around the 1.5-degree mark, and the consequences are already being felt locally:

- in Spain, repeated droughts have wiped out the country’s agricultural capacity. The orchard of Europe is going to disappear. The south of France is beginning to suffer from the same disruptions.
- in Catalonia, this same drought threatens the drinking water supply of millions of inhabitants
- in France, increasingly extreme climate events threaten populations and industries

A 1.5 degrés, nous avons déja scellé le destin de pays entiers. Les îles Tuvalu, par exemple, vont disparaître. Ce pays est le premier du monde à avoir signé un accord d’asile climatique avec l’Australie. Imaginez-vous votre pays entier disparaître ? Ceci nous semble lointain, pourtant c’est la réalité que sont en train de vivre des millions de personnes dans le monde : leur existence même est menacée. Que feriez-vous si vous ne pouviez, physiquement, plus vivre en France ? Laissez-moi penser que les obsessions migratoires de politiciens et autres consultants du bar du coin sont d’un tel ridicule comparé à ce qui nous attend…

We are ahead of the IPCC’s schedule for 1.5 degrees: for the first time, +1.5° was exceeded for 12 consecutive months.

At 2 degrees, we already know that food security will no longer be guaranteed and that conflicts linked to climate migration will break out, both over access to resources and precisely to block the migration of people who are simply trying to survive. A fantasy? Look, for example, at what is happening between Iran and Afghanistan over access to drinking water.

At 2.5 degrees, some regions of the world, particularly in the tropics, become uninhabitable. The reassurers argue that humans have always managed to adapt, but there are physiological limits, and scientific reality applies to everyone. Beyond a certain threshold, the combination of temperature and ambient humidity makes sweating ineffective. We die. This is not an opinion but a reality that became sadly famous last summer at a Taylor Swift concert.

On the difficulty of orders of magnitude

One of the major problems with global warming is that, even for someone who cares about the subject, it is hard to grasp the orders of magnitude. I, for example, was already aware of the issue, yet I kept flying, especially for work. I knew that flying was bad for the climate, but I had no idea how bad: we know it is bad, but few people give us the orders of magnitude. We thus end up with politicians who urge us to switch off devices on standby while offering us a round trip to Rome by plane for €100 or less! It makes no ecological sense, though it does make economic sense: a Paris-Dubai round trip, for example, is equivalent to your annual carbon budget under the Paris Agreement! Tools now exist to assess your impact, and activists such as Bon Pote do an excellent job of describing the problems inherent to the aviation industry.

As a regular at international conferences for my job, my realization on this particular subject was as late as it was brutal. I am still ashamed of it. Nevertheless, a little over 4 years ago I decided to stop flying and to attend only the conferences I can reach by train. It is wishful thinking, though, because I am only human: what will I do when my company gives me the choice between being shown the door and giving a talk in San Francisco, or attending a work meeting in Morocco? This issue is also a source of family tension, between the legitimate wish to "travel", to explore the world and its wonders, and my stubbornness in saying no. How long will I resist that pressure? A good part of my career was built on meeting experts in my field abroad, and on sharing my knowledge: I am a product of global warming. What legitimacy do I have to "lecture" you and ask you, of all people, to give it up? Who am I to deny others what I did myself?

I have no good answer to that question, other than appealing to your sensitivity. Besides, I will not be one of those who judge you for your choices: I am the first to be full of contradictions, and each of us makes choices based on what we know at a given moment, on our constraints, and ultimately on our convictions. For some decisions we do have a choice, and some will be easier for you to make than for me, and vice versa!

It is much easier for a city dweller to give up an SUV than for someone in a rural area with no access to public transport. Conversely, eating local and supporting local producers is simpler for a rural resident than for a city dweller. Each of us, at our own level, makes the choices that seem appropriate given our personal situation.

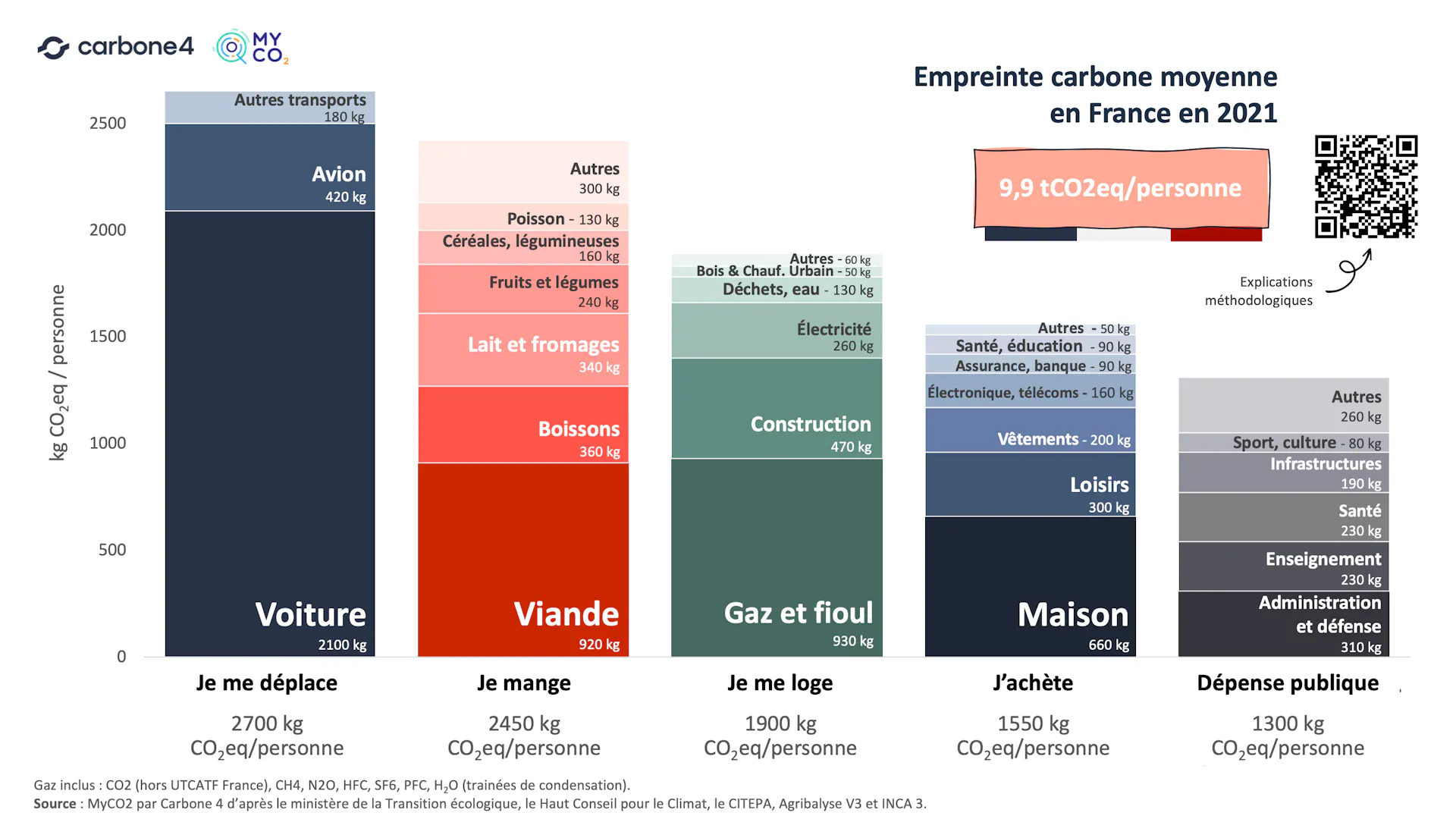

Let us take these orders of magnitude as an example. To honor the Paris Agreement and limit global warming to 2 degrees, our per-capita carbon footprint must fall to 2 tonnes per year. Estimates vary, but today the carbon footprint of a French person is on the order of 10 tonnes per year.

In France, 31% of emissions are linked to transport, meaning private cars and freight, which shows how important the car question is. Yet what do we see? The car industry is the second-largest advertiser in France, with a communication budget of about €1,500 per car. Our President loves cars and sends a disastrous message. Bruno Le Maire, needing to find 10 billion in savings, chose to find them in the environment and mobility budgets. In short, we are sold cars instead of electric bikes, whose carbon footprint is 20 times lower.

This site shows the evolution of the per-capita carbon footprint, country by country, since the start of the industrial era. There are 2 important things to understand:

-

the carbon footprint is directly correlated with the population’s standard of living. The richer we are, the more we consume. The more we consume, the larger our footprint. The carbon footprint is 10 to 50 times higher in industrialized countries than in Africa. It is therefore completely false to claim, as Nicolas Sarkozy does, that the demographic crisis is responsible for the climate crisis. What matters is not the number of people but their capacity to consume. Reducing the population would therefore be a solution… provided it is done in developed countries!

-

the carbon footprint is mostly driven by the consumption of fossil fuels (oil and coal). This explains why the carbon footprint of a French person is lower than that of a German or a Pole: where they use coal-fired power plants for heating and to run their industries, we have nuclear power plants.

On the subject of nuclear power, let me be clear: I am an environmentalist and I am in favor of it. Once you know these orders of magnitude, once you know that about 240,000 people die in Europe every year because of air pollution, mainly linked to coal combustion, the traditional anti-nuclear environmentalist position is hard to defend. I held that anti-nuclear position myself because of what I read as a kid (Pif’Gadget, Greenpeace), positions essentially rooted in the fear of nuclear power, of accidents and of their terrible consequences. That was before I took the time to study the subject. I have since largely revised my position and am convinced that we will not make it without nuclear power. It is in fact rather ironic that biodiversity has greatly increased at Chernobyl since the area was abandoned, which is reason for optimism about the resilience of ecosystems should we disappear!

This does not mean that we cannot develop renewable energy, quite the contrary, but once again we need to keep the orders of magnitude in mind. You will find different figures depending on how pessimistic the estimates are (notably regarding the capacity factor), but you need to understand that replacing a single nuclear reactor takes about 1,000 wind turbines or 55 km² of solar panels. We have 56 reactors in France… Unless we reduce our electricity consumption, this means intensifying the competition for land use even further: should land be used to produce food, energy or housing, or be left free for biodiversity?
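To make that order of magnitude concrete, here is a minimal sketch that simply scales the per-reactor figures quoted above to the whole French fleet. The constant and function names are hypothetical, and the inputs are the rough estimates from the text, not measured data.

```python
# Rough figures quoted in the text (orders of magnitude, not precise data).
REACTORS_IN_FRANCE = 56
TURBINES_PER_REACTOR = 1000   # wind turbines needed to replace one reactor
SOLAR_KM2_PER_REACTOR = 55    # km² of solar panels needed to replace one reactor


def replacement_needs(reactors: int) -> tuple[int, int]:
    """Return (wind turbines, km² of solar panels) needed to replace `reactors` reactors.

    The two values are alternatives: either that many turbines,
    or that much solar surface, not both at once.
    """
    return reactors * TURBINES_PER_REACTOR, reactors * SOLAR_KM2_PER_REACTOR


turbines, solar_km2 = replacement_needs(REACTORS_IN_FRANCE)
print(f"{turbines} wind turbines, or {solar_km2} km² of solar panels")
```

With these assumptions, replacing the whole fleet would take tens of thousands of wind turbines, or a few thousand square kilometers of panels, before any reduction in consumption.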

Is it any surprise, then, that Germany had to raze entire villages to mine the coal needed to restart its power plants? A climate bomb! And should we then be surprised that reactionaries seize on such examples to discredit any effort to protect the environment?

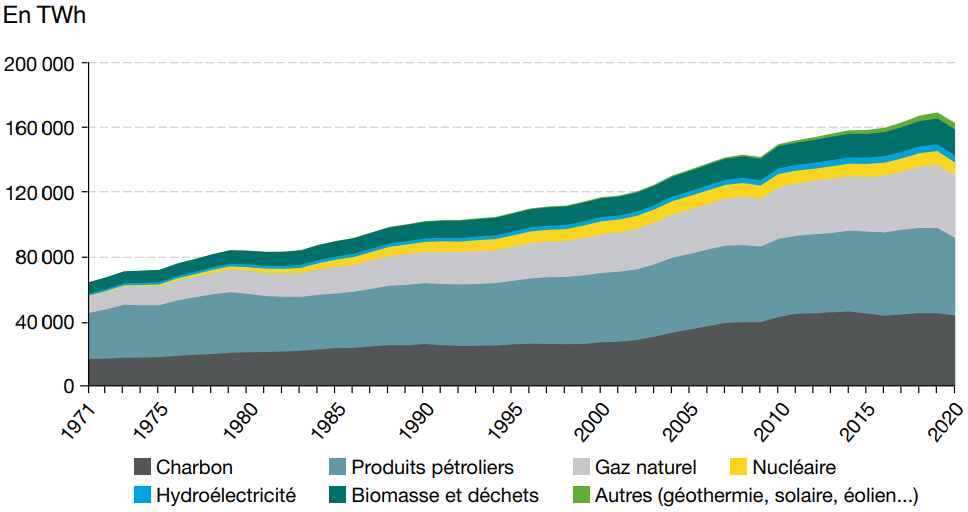

Here is the crux of the problem: our modern society is built on the massive consumption of energy, oil in particular, and unless we drastically reduce that consumption, we will not be able to meet our commitments. Right now, we would need to cut our fossil fuel consumption by 5% per year to get there. Since this consumption is directly correlated with sacrosanct growth, it amounts to nothing less than the equivalent of a COVID every year! Our politicians have already given up, since no one is ready for the sacrifices this implies.
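A 5% cut every year compounds, which is what makes it so demanding. The sketch below shows how much fossil fuel consumption would remain after a given number of years, and how long it takes to reach a given fraction of today's level. The function names are hypothetical and the only input taken from the text is the 5% rate.

```python
import math


def remaining_fraction(annual_cut: float, years: int) -> float:
    """Fraction of the initial consumption left after `years` of cutting `annual_cut` each year."""
    return (1 - annual_cut) ** years


def years_to_reach(target_fraction: float, annual_cut: float) -> float:
    """Years of sustained cuts needed to fall to `target_fraction` of the initial level."""
    return math.log(target_fraction) / math.log(1 - annual_cut)


print(f"After 10 years: {remaining_fraction(0.05, 10):.0%} of today's consumption")
print(f"Halving takes about {years_to_reach(0.5, 0.05):.1f} years")
```

Under this assumption of a steady 5% yearly cut, it takes roughly a decade to shed about 40% of consumption, and more than thirteen years to halve it.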

|

Note

|

I was asked about the uranium supply chain, which can be problematic. That is true, but only if we stay with the current generation of reactors. Fourth-generation reactors, the breeder reactors, can use the by-products of fission and give us enough resources for centuries of consumption. Yet it is precisely the experimental programs that were halted (Superphénix, Astrid). A waste denounced by Yves Bréchet during his Senate hearing. |

Sacrifices, though, are exactly what this is about. Sacrificing future generations, or sacrificing our way of life. We still live by the myth of infinite growth: our whole society is built on this single economic concept, which makes no physical sense. Physical reality is relentless: there is no infinite growth in a finite world. Here again, orders of magnitude are essential: 2% growth means that value doubles roughly every 35 years. Value means production, which means energy consumption, which means environmental impact.

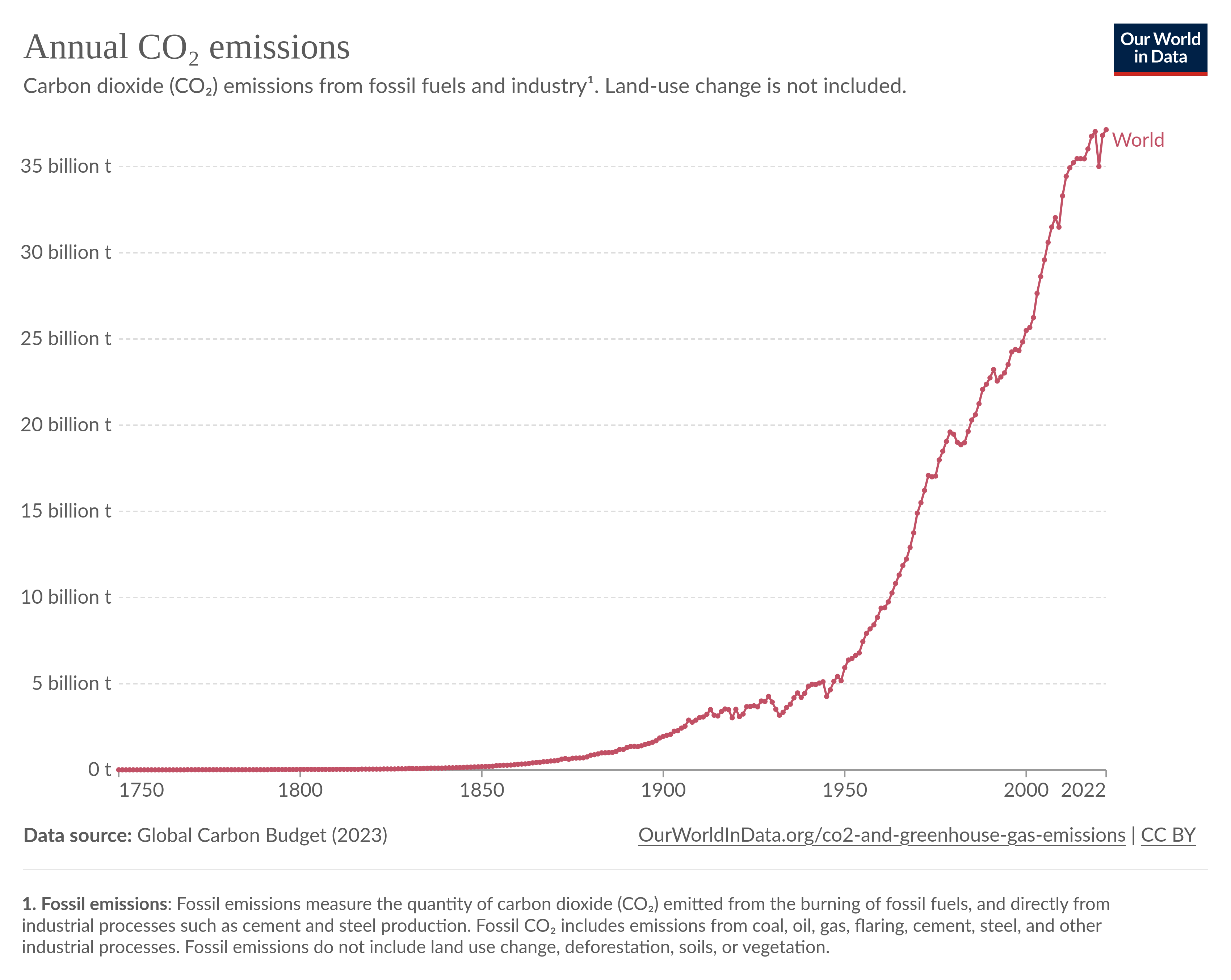

Put differently, growth is an exponential curve: the kind of curve we would rather not see.
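The doubling time of a steadily growing quantity can be checked in a few lines. This is only a sketch with hypothetical function names: the popular "rule of 72" shortcut gives 72 / 2 = 36 years for 2% growth, while the exact compound-growth formula gives about 35 years.

```python
import math


def doubling_time(rate: float) -> float:
    """Exact number of years for a quantity growing at `rate` per year (0.02 = 2%) to double."""
    return math.log(2) / math.log(1 + rate)


def rule_of_72(rate_percent: float) -> float:
    """Common mental shortcut: divide 72 by the growth rate expressed in percent."""
    return 72 / rate_percent


print(f"Exact doubling time at 2%: {doubling_time(0.02):.1f} years")
print(f"Rule of 72 at 2%: {rule_of_72(2):.0f} years")
```

Either way, the conclusion is the same: at 2% a year, production doubles within roughly one generation, then doubles again within the next.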

Is it not worrying that, in this chart, major crises such as the oil shocks of the 1970s or COVID have had little or no influence on our consumption?

Another way to visualize what exponential means is the temperature anomaly animation produced by NASA. I find it particularly effective for understanding the runaway effect:

This exponential explains why renewable energy has not grown at the expense of fossil fuels: it has essentially come on top of them, because we always need more energy to sustain this growth.

So while coal’s share of global energy production is falling in percentage terms, its volume has never been higher! This bad news should nevertheless be put into perspective, since coal production is declining in some places: in countries such as Germany, renewables are meant to replace coal-fired plants.

The problem with curves like these is that forecasts become almost impossible to make. We enter the realm of the unknown, and scientific minds like mine do not like that. Serge Zaka, who holds a PhD in agroclimatology, describes ocean warming phenomena that are statistically impossible (in other words, the deviations exceed what classical statistics considers possible).

This question of growth is nevertheless central to our survival. Mind you, I am not talking about the survival of the species, or even about "saving the planet". Even as a pessimist, I think the human species will survive. However, do not count on me to tell you how many will survive: my intuition, given the parameters discussed here, is that we risk facing a new major crisis. And even if the human species did not survive, life would go on without us: it existed before, it will exist after.

The story of a skier who kept telling himself "so far, so good"

To use an analogy, we are a bit like a weekend skier: he goes down the slope and feels comfortable. He speeds up and enjoys himself, until he realizes that he has picked up too much speed, that the slope is far too steep and that he can no longer control his run. At the bottom there is a crossing, with other skiers. It is too late to stop and there are only 2 options:

-

swerve and let himself fall, at the risk of breaking a leg, to avoid the group

-

keep going and collide, dragging many other people into the fall, with potentially many victims

I suspect many people instinctively pick the 2nd option, because the first is uncomfortable and our instinct of self-preservation plays tricks on us: unable to anticipate even worse long-term consequences, our species walls itself up in denial and picks the least logical option. Worse, our natural tendency, paradoxically often in good faith, is to shift responsibility away. Examples abound: installing speed cameras instead of teaching people to respect the limits, asking children to wear high-visibility vests instead of making roads safe, telling girls to cover up instead of asking boys to stop seeing them as sexual objects, asking you to switch off your internet box instead of riding your bike to fetch bread…

Reassurism and techno-solutionism

Now that global warming is tangible and endured even by the most skeptical among us, a new evil is on the march: reassurism. Taking over from climate skepticism, it consists of claiming that we will always pull through, that humans have always adapted, that we will find technical solutions, et cetera, et cetera. Reassurism is a plague because it encourages inaction. Pleasant to hear, it is the perfect companion of the status quo, dressed up as "common sense", but also an even greater risk for the future. I count the techno-solutionist discourse we hear more and more as a form of reassurism.

Take, for example, the much-discussed idea of carbon sequestration: why could we not extract carbon from the atmosphere? At first glance the idea is not stupid: if we can do it, it would be silly not to. Yet a tiny bit of research is enough to see the problems. When we talk about CO₂ concentrations, we talk in parts per million. For example, 200 ppm means 200 molecules of CO₂ per million molecules of air: an extremely low concentration, but one with devastating effects. If you remember some physics, I recommend this excellent video by Science Etonnante on the mechanisms of warming and the myth of warming saturation. Even assuming we had a "cleaning" technology, we would have to move a phenomenal amount of air to extract the CO₂, and moving that air would require a non-negligible amount of energy… In short, these are often "PR stunts" by rather disreputable companies seeking above all to make money off the climate… yet investors can be seduced by them.

Another example is the colonization of Mars announced by Elon Musk. Can we be serious for just 5 minutes? It is no surprise that the only ones who believe in it are economists; it is above all the model of the headlong rush: since we cannot save our planet, let us make another one livable! The idea is all the more ridiculous because we cannot even control the climate here, starting with fighting global warming, so what about changing the climate of a planet we know almost nothing about… Wanting to set foot on Mars is one thing; terraforming it is quite another.

But one of the problems with growth and with the evolution of technology and information is that they make possible individual actions with potentially catastrophic consequences, first among them geoengineering. One particularly worrying article shows that we have the means to disperse sulfur in the atmosphere cheaply in order to cool it. Even with terrifying consequences, such as acid rain, or the simple fact that stopping the dispersal would trigger an even greater massive warming, the fact is that it could work in practice. We are thus squarely in the situation I described above: rather than solving the problem at its source, we carry on, even if it means far worse consequences later. When a technology is available, it gets used. The question is therefore not IF but WHEN it will be used, either by a billionaire who wants to keep making a profit against all common sense, or by a State fighting for its survival. If you remember the Doomsday Clock, it seems to me that we are getting dangerously close to midnight…

The other perverse effect of techno-solutionism is that, even if it works, it does not encourage sobriety. None of the technological advances that enabled savings has ultimately reduced consumption. For example, combustion engines are far more efficient today than they used to be, but the savings were used to increase range or to build bigger cars: this is the rebound effect.

In practice, then, all these technological advances only serve to sustain an unchanged consumption model. Another example? Agricultural yield per hectare has been multiplied by 3 since 1960, and by more than 10 since the start of the industrial era:

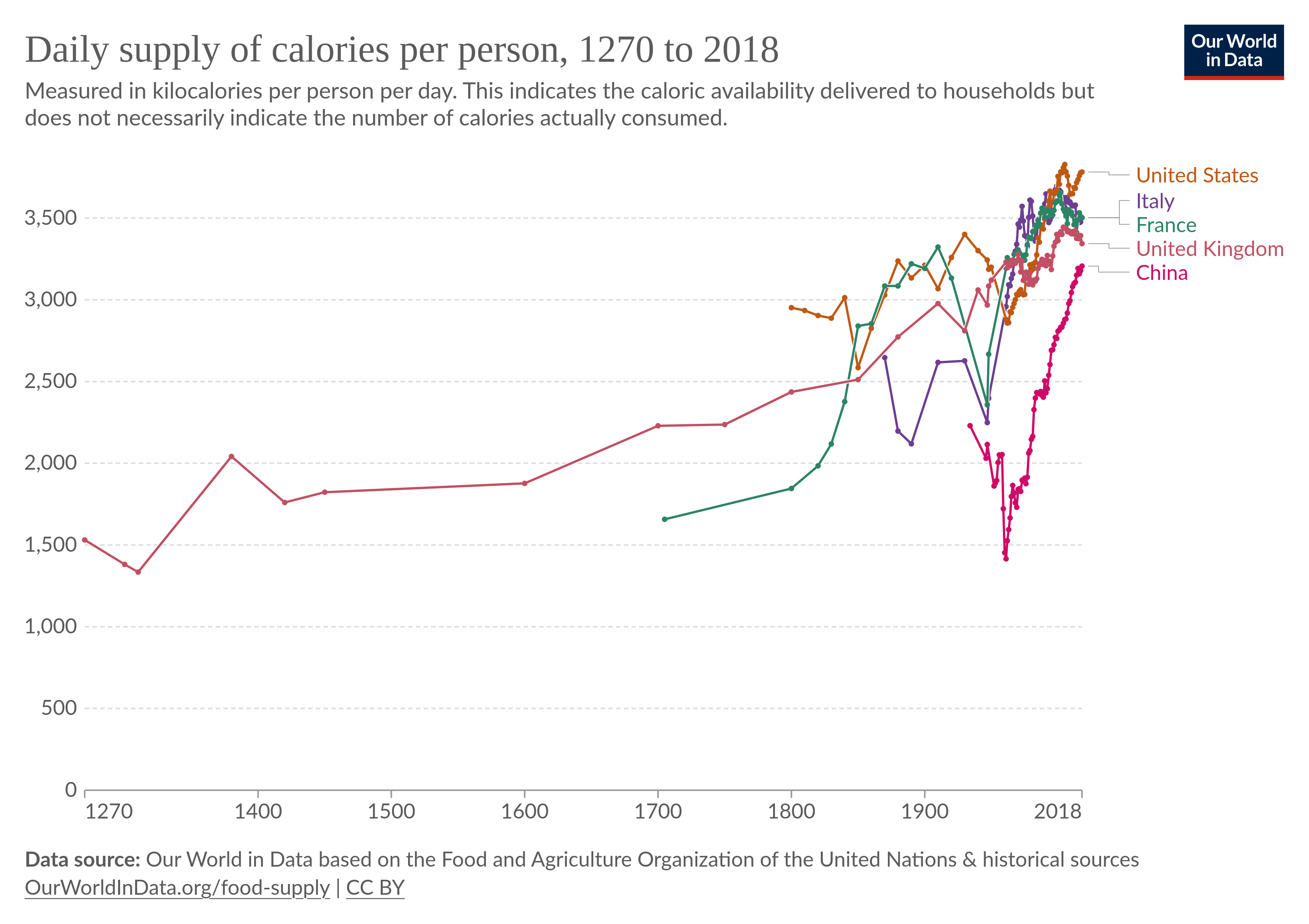

This exposes the lie that we must keep this model going in order to feed the planet: we could use the per-hectare productivity gains to feed more people, but we chose to invest them in biofuels to run cars, or in the industrial production of processed products that threaten our health. Meanwhile, farmers’ incomes keep falling, famines have not disappeared (mostly for logistical reasons) and cardiovascular diseases are soaring. We produce far more than we need to survive, but we "produce growth" by processing products. Whereas at the beginning of the century most consumption went from raw product to consumer, most of it now goes through processed products. This abundance is illustrated by this chart of calorie intake per capita:

To those who will reply that more calories mean better health, remember that we need between 2,000 and 2,500 calories per day, a threshold that was crossed in the early 1820s. Since then we have been well beyond it, which notably explains the explosion of obesity and cardiovascular disease (combined with the sedentary lifestyle made possible by machines).

So growth is not necessarily synonymous with progress: beyond a certain threshold, it becomes pathological and does more harm than good.

The first signs of this realization are not new: the Club of Rome was already voicing it 50 years ago. Even today, economists who advocate degrowth are still rare. In France, researchers such as Timothée Parrique brilliantly show that degrowth can no longer be treated as a dirty word. On the contrary, it is becoming necessary, as the title "ralentir ou périr" ("slow down or perish") suggests.

Nor should techno-solutionism be confused with the use of techniques that limit the impact of our consumption. Some tools will be indispensable, but if we must respond to an emergency, it is better to do so with the technologies we have, not with those we are not sure will be available in 10 years.

Why a collapse?

As we have seen, the outlook is not favorable, far from it. We know, our governments know, yet nothing changes. Was it not Emmanuel Macron who promised us that his term "will be ecological or will not be"? We have the answer: after a Citizens’ Convention on Climate emptied of its substance, after the sacrifice of ecology for the benefit of the agro-industrial players who keep us locked into this model, there is nothing but cynicism in political decisions. Even at the local level, in my municipality, the majority revels in talk of green growth, a concept that only speaks to economists and has never demonstrated the slightest success. Once again, nothing surprising: a basic level in mathematics or physics is enough to understand that there can be no growth without sacrificing resources, which translates either into pollution or into global warming. In my municipality, supermarkets are still being built on the outskirts as in the 1970s, and environmentalists are mocked as "degrowthers", "anti-jobs" and "pro-insecurity". Never mind the catastrophic state of the rivers, or that water has to be imported from Loire-Atlantique to meet the needs of well-known local agri-food industries, since we have growth! Never mind that we have to build on farmland to house all the neo-rurals attracted by the area’s economic growth…

We see here that, despite all the information available, we are in the position of the skier who can no longer stop: there will be damage!

Still, hardly enough to prophesy a civilizational collapse, you might say. True, but there is more: it is the conjunction of factors that may bring about our fall.

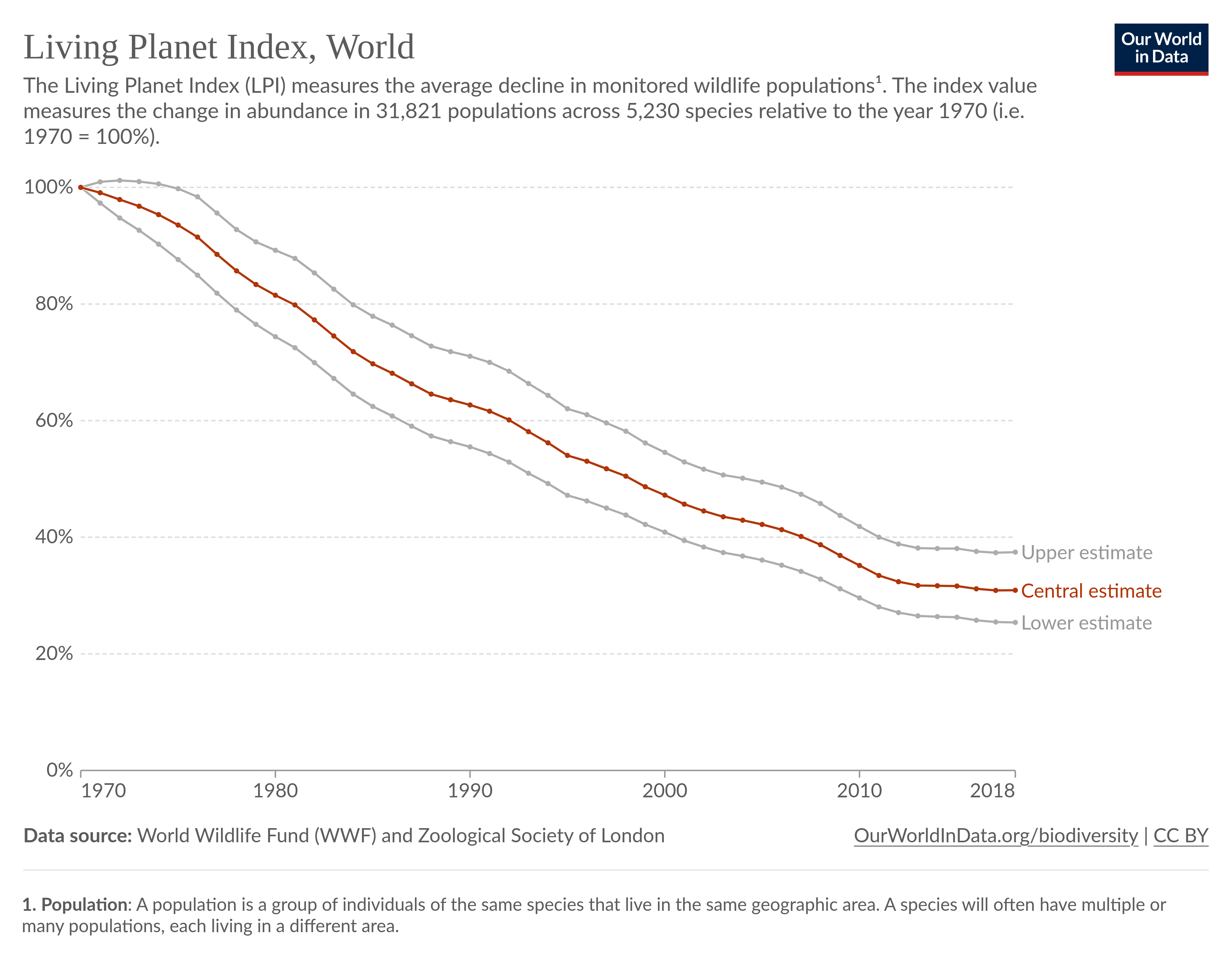

We mentioned that, in climate disruption, most of the problem revolves around fossil fuels. However, other large-scale problems are also tied to our development model: the collapse of biodiversity, for example. We have entered a new phase of mass extinction, so well documented that it has a name, the Holocene extinction:

In 50 years, more than half of the wild world has disappeared. I am not sure you fully realize: humans have been on Earth for more than 4 million years, Homo sapiens for about 300,000 years, and in just 50 years we have wiped out more than half of that wildlife. For marine biodiversity the situation is even worse, with overfishing, rising temperatures and ocean acidification. For more than 6 months we have been living through a genuine marine heatwave, but who has heard of it?

Yet humans are part of an ecosystem: we boast about our adaptability, but we are the first to depend on our environment. Destroying it directly threatens our survival: you have to be mad to believe that humans can survive alone.

Faced with opposition to degrowth, or even to mere calls for sobriety, the most reactionary rhetoric is on the march. There is little cause for optimism when, in France, a minister calls environmental activists eco-terrorists while at the same time supporting agro-industrialists who set fire to mutual insurance offices… Here again one might suspect ignorance, but it is unspeakable cynicism: they all know perfectly well what is coming, yet none has the political courage to do what is truly necessary: build a new model of society based on sobriety. What can be said of a country where people are more easily moved by a can of soup thrown at a painting protected behind glass than by several hundred deaths in India following a heatwave… Not much cause for optimism.

We could stop there, but other signals are just as worrying. I recalled, for example, how heavily our modern society relies on fossil fuels. The chart below shows the breakdown of energy consumption by source:

The dip in 2020 is due to COVID, but make no mistake: at the global scale, fossil fuels largely dominate. Renewables account for a growing share of production, but are only comparable to the nuclear fleet. Within this picture, the situation of oil is the most critical: we know that reserves are running out. Whether or not we decide to do without it voluntarily, we will reach the end of oil before the end of the century.

Yet in many fields we are completely dependent on oil, not as an energy source but as a raw material:

-

pharmacology

-

agriculture (machinery, but also fertilizers)

-

healthcare

-

textiles

-

cosmetics

-

…

You will have noticed that Europe has little or none of this resource. Would we rather keep burning what we have left to travel to the other side of the world for €500, or use it to produce our food and make our medicines? My choice is quickly made…

In the short or medium term, Europe’s dependence on oil will mean subservience to the countries that have it (assuming they are willing to sell us any). Given that these countries are hardly the most democratic, respect for human rights could, in the medium term, become a distant memory. What choices will we have then? Go to war to "steal" these resources from them? Force them to sell to us? At what price? How?

Replacing oil as an energy source is possible, but it requires a massive electrification of our means of subsistence (transport, machine tools, industries, …) and a relocation of production, since maritime transport depends essentially on this resource. And electrification means increased electricity production. What means do we have to replace such a quantity of energy? Environmentalism is never as bad as when it ignores physical reality.

The countries that have these resources, then, are not particularly friendly. We are talking about countries whose recent actions do not bode well for Europe, nor for their own populations: Russia, China, … Russia, which keeps extending its influence over an African continent eager for "revenge" for colonization and the exploitation of its resources by the West. Admittedly, Russia is not driven by a kind-hearted wish to repair the West’s mistakes; it is just as keen to grab the continent’s mineral resources and thereby gain leverage… to win its "civilizational war", but the message has been received: France must leave!

People like me take part in this civilizational war in spite of themselves. Those who know me know how hard, in particular, I struggle against the urge to quit my profession. To be honest, if I had neither a family nor loans to repay (like everyone else), I think I would already have given up what is nevertheless one of my passions.

Indeed, as a developer, not only do I contribute massively to global warming (digital technology accounts for a rapidly growing share of global energy consumption, notably because of the explosion in the number of devices), but I have also provided tools of mass manipulation, used as such by powers seeking to destabilize us. Social networks in particular have become veritable dumps where conspiracy, anti-vax and climate-skeptic ideas are promoted far more than others. So-called "right-wing" ideas are favored by the Twitter/X algorithms, and Russia’s manipulations destabilize our democracies. Knowing that what I build serves the collapse of society and the spread of the most nauseating ideas makes me sick.

There is no denying that populism is surging: it has won in Brazil (Bolsonaro), in the United States (Trump), in the United Kingdom (Johnson), in Hungary (Orban), in the Netherlands (Wilders), in Argentina (Milei). You have to be awfully optimistic to believe that France can miraculously escape this scourge. And once such governments are in place, we know what they think of the economy and therefore of ecology. Worse, we know what these people think of women’s rights and, more broadly, of anyone who does not think like them. I invite you, by the way, to listen to this interview with Véra Nikolski and Jancovici on the end of the oil era and its consequences for democracy and women’s rights.

The ideas promoted in our modern societies are incompatible with our climate objectives: we must all work, and must always be more productive. The tools we design in computing yield productivity gains, but that productivity is not returned to workers as free time; it is reinvested in more growth.

Produce, consume, produce, consume, produce… "purchasing power" and zero unemployment raised to the status of totems.

What I see every day, then, is a headlong rush, coupled with denial, and worse still, a reaction exactly opposite to what would be needed to keep our consumption within planetary boundaries. The question of civilizational collapse therefore arises: even if we do change course, what about the countries that do not, and that will be able to threaten us precisely because they made the opposite choice? It is a dilemma to which I have no answer, and other questions are no more pleasant to hear:

What will we do when people who can no longer live in their own country knock at our door? What will we do when we can no longer import our medicines for lack of long-distance transport? What will we do when Africa asks us to account for the exploitation of its resources? What will we do if we no longer have oil to run our tanks, fly our planes and defend ourselves against countries bent on territorial, economic or cultural conquest? What will we do when we have so thoroughly destroyed our public services (transport, schools, hospitals, electricity production) that we depend on commercial services whose survival rests solely on their margins?

Globalization has paradoxically put us in an extremely precarious situation; today’s Europe is incredibly fragile. All in the name of sacrosanct growth, a concept which, let us remember, makes no physical sense: it is a pure mathematical construct for assessing a country’s economic activity.

Yet economic activity should not be measured solely by the yardstick of what is bought. That is nevertheless what we do every day. The accountant who volunteers for a humanitarian association does the same job as the accountant who works for a mining company. One does not contribute to GDP, the other does. Are their contributions to the well-being of humanity comparable, though?

Doing without growth is not without its problems, so central is it to our society. Without growth, no pensions: a whole world of solidarity to reinvent! What company would voluntarily agree not to grow, when doing so would directly threaten its survival against competitors who choose to carry on?

And yet shrinking the car industry, which is responsible for a large share of global warming, for road deaths and for fine-particle pollution, would only benefit our society. This is not about abolishing it overnight, but about planning our exit. Likewise, the opulence of the agro-industry will have to disappear, as a matter of survival, in favor of sustainable farming: let us produce less but better, with decent incomes and less processing. In the short term, let us buy Fairphones rather than Vision Pros, and repairable products rather than cheap but ecologically absurd ones. There are many levers; it "simply" takes political will. Let us relearn how to share resources: we can invent new models based on cooperation.

The longer we wait, the less socially acceptable the measures we will have to take, and the greater the risk of revolution. It is not surprising that the phrase "punitive ecology" is used in this context, when the most punitive thing of all is the direct consequences of overexploiting our environment: floods, droughts, diseases, fires… The punishment is felt head-on by farmers who lose their crops, by business owners who watch their campsite go up in smoke, by residents who see their homes destroyed by floods or tornadoes…

In conclusion, everything seems to converge on the idea of a massive crash to come: it is the combination of global warming, the collapse of biodiversity, the myth of infinite growth, the rise of populism and the depletion of fossil resources that makes this future possible.

Never would I have thought, when I was chatting in that schoolyard, that I would see this in my lifetime.

Now, not only do I see it coming, I see it as more and more likely. I cannot put a number on it, but to my mind we have left the realm of the possible and entered that of the probable. I have no miracle solutions short of a fundamental change in our way of life, but I do have questions, fears, and a message to pass on: there is still time. The first of our freedoms is still the right to vote; let us use it while we still can.

Stacking and mosaic creation with JSol’Ex 2.0

04 January 2024

Tags: astronomy astro4j solex java

A couple of days ago I released JSol’Ex 2.0. This software can be used as an alternative to INTI to process solar images acquired using Christian Buil’s Sol’Ex. This new version introduces 2 new features that I would like to describe in more detail in this blog post: stacking and mosaic stitching.

Stacking

Stacking should be familiar to anyone doing planetary imaging. One of the most popular pieces of software for stacking is AutoStakkert!, which has recently seen a new major version. Stacking usually consists of taking a large number of images, selecting a few reference points and aligning the images to reconstruct a single, stacked image with a higher signal-to-noise ratio. The individual images are usually small and numerous: since the goal is to reduce the impact of turbulence, videos are typically taken at a high frame rate, often higher than 100 frames per second. In addition, details barely change within a series (provided that you limit the capture to a few seconds, typically to avoid the rotation of the planet), so the images are really "only" distorted by turbulence.

In the context of Sol’Ex image processing, the situation is a bit different: we have a few captures of large images. Capturing an image takes time (it’s a scan of the sun, which consists of a video of several seconds just to build a single image) and the solar details can move quickly between captures. In practice, this means that you can reasonably stack 2 to 5 images, maybe more if the scans are quick enough and there are not too many changes between scans.

The question for me was how to implement such an algorithm for JSol’Ex? Compared to planetary image stacking, we have a few advantages:

- images have a high resolution: depending on the camera that you use and the binning mode, images range from several hundred pixels wide to a few thousand pixels
- the images are well defined: in planetary observation, there are a few "high quality" images in a video of several thousand frames, but most images are either fully or partially distorted
- there’s little movement between images: anyone who has stacked a planetary video knows that jumps of several pixels between 2 images are frequent, just because of turbulence
- for each image, we have already determined the ellipse which makes up the solar disk and should also have corrected the geometry, so that all solar disks are "perfect circles"

Therefore, a naive approach, which I tried without success a few months ago, is a geometric approach where we simply make all solar disks the same (by resizing), align them then create an average image. To illustrate this approach, let’s look at some images:

In this video we can see that there is quite some movement between images. Each of them is already of quite good quality, but we can notice some noise and, more importantly, a shear effect due to the fact that a scan takes several seconds and that we reconstruct the image line by line:

The average image is therefore quite blurry:

Using the average image is therefore not a good option for stacking, which is why I used to recommend AutoStakkert! instead, as it gave better results.

In order to achieve better results, I opted for a simple yet effective algorithm:

- first, estimate the sharpness of each image. This is done by computing the Laplacian of each image. The image with the best sharpness is selected as the reference image
- divide each image into tiles (by default, a tile has a width of 32 pixels)
- for each tile, try to align it with the reference image by computing an error between the reference tile and the image
- the error is based on the root mean squared error of the pixel intensities: the better the tiles are aligned, the closer to 0 the error will be
- this is the most expensive operation, because it requires computing the error for various positions
- we’re only looking for displacements with a maximum shift of 2/3 of the tile size (so, by default, 21 pixels maximum)
- if the shift between 2 tiles is higher than this limit, we won’t be able to align the tiles properly
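To make these steps more concrete, here is a minimal Python sketch. It is not JSol’Ex’s actual code (which is written in Java): the function names, the 4-neighbour Laplacian kernel, the use of the mean absolute response as a sharpness score and the exhaustive search over every shift are all assumptions made for illustration. Images are plain lists of rows of numbers.

```python
import math

def laplacian_sharpness(img):
    """Mean absolute response of a 4-neighbour Laplacian (higher means sharper)."""
    h, w = len(img), len(img[0])
    total = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            total += abs(lap)
    return total / ((h - 2) * (w - 2))

def pick_reference(images):
    """Index of the sharpest image, used as the reference for alignment."""
    return max(range(len(images)), key=lambda i: laplacian_sharpness(images[i]))

def tile_rmse(ref, img, x, y, dx, dy, size):
    """RMSE between the reference tile at (x, y) and the image tile at (x+dx, y+dy)."""
    h, w = len(img), len(img[0])
    if x + dx < 0 or y + dy < 0 or x + dx + size > w or y + dy + size > h:
        return math.inf  # shifted tile would fall outside the image
    acc = 0.0
    for j in range(size):
        for i in range(size):
            d = ref[y + j][x + i] - img[y + dy + j][x + dx + i]
            acc += d * d
    return math.sqrt(acc / (size * size))

def align_tile(ref, img, x, y, size=32, max_shift=None):
    """Try every shift up to max_shift and return (dx, dy, error) of the best one."""
    if max_shift is None:
        max_shift = (2 * size) // 3  # 21 pixels for the default 32-pixel tile
    best = (math.inf, 0, 0)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err = tile_rmse(ref, img, x, y, dx, dy, size)
            if err < best[0]:
                best = (err, dx, dy)
    return best[1], best[2], best[0]
```

A perfectly aligned tile gives an error of exactly 0, and the cost of `align_tile` grows with the square of `max_shift`, which is why this step dominates the processing time.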

We could have stopped here and reconstructed an image at this stage, but the result wouldn’t be great: the tile boundaries would show up as clearly visible square artifacts. To reduce these artifacts, I opted for a "sliding window" algorithm, where the next tile overlaps the previous one by a factor between 0 (no overlap) and 1 (100% overlap). This means that for each pixel, we get a "stack" of pixel values computed from the alignment of several tiles. The final pixel value is then computed by taking the median value of the stack. Even so, some vertical or horizontal stacking artifacts can still sometimes be visible, so the last "trick" I used is to build the stacks by only taking pixels within a certain radius of the tile center, instead of the whole square.
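The sliding window, the per-pixel median and the radius restriction can be sketched as follows in Python. This is only an illustration under stated assumptions, not the JSol’Ex implementation: `tile_origins` and `stack_pixels` are hypothetical names, the rounding of the step is my own choice, and the tiles are assumed to have already been aligned on the reference grid.

```python
from statistics import median

def tile_origins(length, tile=32, overlap=0.3):
    """Start offsets of overlapping tiles along one axis (overlap in [0, 1])."""
    step = max(1, round(tile * (1 - overlap)))
    last = max(0, length - tile)
    origins = list(range(0, last + 1, step))
    if origins[-1] != last:
        origins.append(last)  # make sure the far edge is covered
    return origins

def stack_pixels(height, width, aligned_tiles, tile=32, radius=None):
    """Median-combine overlapping tiles.

    aligned_tiles is a list of (x0, y0, pixels) where pixels is a tile-by-tile
    block already aligned on the reference grid. Only pixels within `radius`
    of the tile center contribute to the stack.
    """
    if radius is None:
        radius = tile / 2
    c = (tile - 1) / 2  # tile center in tile coordinates
    samples = [[[] for _ in range(width)] for _ in range(height)]
    for x0, y0, pixels in aligned_tiles:
        for j in range(tile):
            for i in range(tile):
                if (i - c) ** 2 + (j - c) ** 2 > radius ** 2:
                    continue  # outside the disc: ignore this pixel
                y, x = y0 + j, x0 + i
                if 0 <= y < height and 0 <= x < width:
                    samples[y][x].append(pixels[j][i])
    # pixels that no tile contributed to are set to 0.0 in this sketch
    return [[median(s) if s else 0.0 for s in row] for row in samples]
```

The median is less sensitive than the mean to a single badly aligned tile, which is presumably why it works well for removing seams between tiles.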

The resulting, stacked image is here:

We can see that:

- noise from the original images is gone
- shearing artifacts are significantly reduced
- the resulting image is not as blurry as the average version

There were, however, some compromises I had to make to keep the stacking process from taking too long. In particular, tile alignment (and especially error computation) is very expensive, since for each tile we have to compute 21*21 = 441 errors by default. With an overlap factor of 0.3, that’s more than 5 million errors to compute for an image 1024 pixels wide. Even computing them in parallel takes a long time, so I added a local search optimization: instead of searching the whole space, I only look at errors within a restricted radius (8 pixels). Then we take the minimal error in this area and resume searching from that position: step by step, we move "closer" to a local optimum which will hopefully be the best possible error. While this doesn’t guarantee finding the best possible solution, it has proved to give very good results while significantly cutting down computation times.
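The local search can be illustrated with a short Python sketch. Again, this is an assumption-laden illustration rather than the actual JSol’Ex code: `local_search` is a hypothetical name, the error is passed in as a function of the shift `(dx, dy)`, and the search window is modeled as a disc of the given radius.

```python
import math

def local_search(error_at, radius=8, max_shift=21):
    """Greedy descent over shifts: look around the current best position,
    jump to the smallest error found, and repeat until nothing improves."""
    cx, cy = 0, 0
    best = error_at(0, 0)
    while True:
        nx, ny, nbest = cx, cy, best
        for dy in range(cy - radius, cy + radius + 1):
            for dx in range(cx - radius, cx + radius + 1):
                if (dx - cx) ** 2 + (dy - cy) ** 2 > radius ** 2:
                    continue  # outside the search disc
                if abs(dx) > max_shift or abs(dy) > max_shift:
                    continue  # beyond the maximum allowed shift
                e = error_at(dx, dy)
                if e < nbest:
                    nx, ny, nbest = dx, dy, e
        if (nx, ny) == (cx, cy):
            return cx, cy, best  # local optimum reached
        cx, cy, best = nx, ny, nbest

# Toy error surface whose minimum sits at a shift of (15, -12)
toy_error = lambda dx, dy: math.hypot(dx - 15, dy + 12)
shift = local_search(toy_error)
```

On a smooth error surface like the toy one, the search reaches the true minimum in a handful of steps. On real tiles, the error surface can have several local minima, which is the trade-off described above.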

In the several tests I made, the quality of the stacked image matched that of AutoStakkert!.

Mosaic composition

The next feature I added in JSol’Ex 2, which is also the one which took me the most time to implement, is mosaic composition. To some extent, this feature is similar to stacking, except that in stacking, we know that all images represent the same region of the solar disk and that they are roughly aligned. With mosaics, we have to work with different regions of the solar disk which overlap and need to be stitched together in order to compose a larger image.

On December 7th, 2023, I gave a glimpse of that feature to the French astrophotographers association AIP, but I wasn’t happy enough with the result, so I decided to delay the release. Even today, I’m not fully satisfied, but it gives reasonable results on several images I tried, so I decided it was good enough for a public release and for getting feedback about this feature.

Mosaic composition is not an easy task, and there are several problems we have to solve:

- first, we need to identify, in each image, the regions which "overlap"
- then for each image, we need to be able to tell if the pixel value we read at a particular place is relevant for the whole composition or not
- then we have to do the alignment
- and finally avoid mosaicing artifacts, typically vertical or horizontal lines at the "edges"

In addition, mosaic composition is not immune to the problem that each image can have different illumination, or even that the regions which are overlapping have slightly (or even sometimes significantly) moved between the captures. Therefore, the idea is to "warp" images together in order to make them stitch smoothly.

Preparing panels for integration

Here are the main steps of the algorithm I have implemented:

- resize images so that all solar disks have the same radius (in pixels), and that all images are square
- normalize the histograms of each panel so that all images have similar lightness
- estimate the background level of each panel, in order to have a good estimate of when a pixel of an image is relevant or not and perform background neutralization
- there can be more than 2 panels to integrate. My algorithm works by stitching them 2 by 2, which implies sorting the panels by putting the panels which overlap the most in front, then stitching the 2 most overlapping panels together. The result of the operation is then stitched together with the next panel, until we have integrated all of them.
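The pairwise ordering can be sketched in Python as follows. This is one possible reading of the steps above, not the JSol’Ex implementation: the function names, the `margin` factor applied to the background level and the greedy rule used to pick the "next" panel are all assumptions made for the sake of the example.

```python
def relevance_mask(panel, background, margin=1.5):
    """True where a pixel is clearly above the estimated background level.
    The margin factor is a hypothetical parameter."""
    return [[v > background * margin for v in row] for row in panel]

def overlap(mask_a, mask_b):
    """Number of pixels that are relevant in both masks."""
    return sum(a and b
               for row_a, row_b in zip(mask_a, mask_b)
               for a, b in zip(row_a, row_b))

def union(mask_a, mask_b):
    """Pixels that are relevant in at least one of the two masks."""
    return [[a or b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(mask_a, mask_b)]

def stitching_order(masks):
    """Greedy order: start with the two most overlapping panels, then keep
    adding the panel that overlaps most with what has been merged so far."""
    n = len(masks)
    pairs = [(overlap(masks[i], masks[j]), i, j)
             for i in range(n) for j in range(i + 1, n)]
    _, i, j = max(pairs)
    order = [i, j]
    merged = union(masks[i], masks[j])
    remaining = [k for k in range(n) if k not in (i, j)]
    while remaining:
        k = max(remaining, key=lambda r: overlap(merged, masks[r]))
        order.append(k)
        merged = union(merged, masks[k])
        remaining.remove(k)
    return order
```

The masks play a double role here: they decide which pixels are relevant for the composition, and they give a cheap measure of how much two panels overlap.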

The stitching part works quite differently from typical stacking. In stacking, we have complete data for each image: we "only" have to align them. With mosaics, there are "missing" parts in the image that we need to fill in. To do this, we have to identify which part of a panel can be blended into the reconstructed image in order to complete it. This means that the alignment process is significantly more complicated than with typical stacking, since we work on "missing" data. Part of the difficulty is precisely identifying whether something is missing or not, that is to say whether the signal of a pixel in one of the panels is relevant to the composition of the final image. This is done by comparing it with the estimated background level, but that’s not the only trick.

Despite the fact that our panels are supposedly aligned and that the circles representing the solar disks are supposed to be the same, in practice, depending on the quality of the capture and the success of the ellipse regression, the disks may be slightly off, with deformations. There can even be slight rotations between panels (because of flexure at capture time, or processing artifacts). As a consequence, a naive approach consisting of trying to minimize the error between 2 panels by moving them a few pixels in each direction, like in stacking, doesn’t work:

- first of all, while you may properly align one edge of the solar disk, other regions will be misaligned. If these regions correspond to high contrast areas like filaments, it gives really bad results. If it happens at the edges of the sun, you can even see part of the disk being shifted a few pixels away from the other panel, which is clearly wrong.
- second, estimating the error is not so simple, since we have incomplete disks. And in this case, the error has to be computed on large areas, which means that the operation is very expensive.
- third, because we have to decide whether to pick a pixel from one panel or the other, this tends to create very strong artifacts (vertical or horizontal lines) at the stitching edges

The stitching algorithm

Given all the issues I described above, I chose to implement an algorithm which works similarly to stacking, by "warping" one panel into another. This process is iterative: the idea is to take a "reference" panel, which is the one which has the most "relevant" pixels, and align tiles from the second panel onto this reference panel.

To do this, we compute a grid of "reference points" which are in the "overlapping" area. These points belong to the reference image, and one difficulty is to filter out points which belong to "incomplete" tiles. Once we have these points, for each of them, we compute an alignment between the reference tile and the tile of the panel we’re trying to integrate. This gives us, roughly, a "model" of how tiles are displaced in the overlapping area. The larger the overlapping area is, the better the model will be, but experience shows that distortion on one edge of the solar disk can be significantly different from the distortion at the other edge.
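Selecting the reference points can be sketched like this in Python. It is a simplified illustration, not the JSol’Ex code: `reference_points` is a hypothetical name, the panels are represented by boolean relevance masks (True where a pixel carries signal), and a tile is considered "complete" only if every one of its pixels is relevant in both panels.

```python
def reference_points(mask_ref, mask_other, tile=32, step=16):
    """Top-left corners of the tiles that are complete in both panels,
    that is, tiles lying entirely inside the overlapping area."""
    h, w = len(mask_ref), len(mask_ref[0])
    points = []
    for y in range(0, h - tile + 1, step):
        for x in range(0, w - tile + 1, step):
            complete = all(mask_ref[y + j][x + i] and mask_other[y + j][x + i]
                           for j in range(tile) for i in range(tile))
            if complete:
                points.append((x, y))
    return points
```

Each returned point would then be handed to a tile alignment routine, and the resulting list of (point, shift) pairs is the raw material for the displacement "model".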